At CVAT, we know how important quality is when it comes to labeling large volumes of data. A small mistake can have serious consequences, such as a misclassified traffic light impacting autonomous vehicle safety or mislabeled medical images impacting patient care. That's why validation is crucial to ensure annotations meet quality standards before training AI models.

To help our customers maintain high labeling standards, CVAT already supports multiple validation approaches, including manual reviews and Ground Truth (GT) jobs. While both methods deliver excellent accuracy, they come with significant trade-offs: manual reviews require a lot of expert time, and GT jobs need carefully curated validation sets — making them expensive, time-consuming, and hard to scale for large volumes of data.

That’s why we’re excited to introduce Honeypots — a powerful new addition to CVAT’s automated quality assurance workflow. Designed for scalability, honeypots make it possible to validate large datasets more efficiently and cost-effectively, especially when traditional methods become impractical.

What are Honeypots?

Honeypots are a smart way to monitor annotation quality without disrupting your team’s workflow. The mode works by randomly embedding extra validation frames, the so-called “honeypots”, directly into your labeling tasks.

With this validation mode, annotators don’t know which frames are being checked, so you can measure attentiveness, consistency, and accuracy in a completely natural and unobtrusive way.

Why and When to Use Honeypots?

Quality assurance in data labeling traditionally requires significant resources — either extensive manual reviews or large validation sets. Honeypots offer a more scalable and efficient solution that maintains high standards while reducing overhead.

Consider a medical imaging project with 10,000 images. Traditional validation might use 100 expert-annotated images (1% of the dataset) for quality checks—far from ideal when patient care is at stake.

This is where Honeypots transform the validation process. Instead of using those 100 validated images just once, Honeypots let you embed them multiple times throughout your annotation pipeline, achieving 5-10% validation coverage without requiring additional expert input.
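To make the arithmetic concrete, here is a rough back-of-the-envelope sketch in Python. The function name, the job size of 100 frames, and the number of honeypots per job are illustrative assumptions rather than CVAT settings, and "coverage" is taken here to mean honeypot checks relative to the number of regular frames.

```python
import math

def honeypot_plan(regular_frames, pool_size, job_size, honeypots_per_job):
    """Estimate how far a small validation pool stretches across jobs.

    All parameters are hypothetical planning inputs, not CVAT options.
    """
    jobs = math.ceil(regular_frames / job_size)
    slots = jobs * honeypots_per_job  # honeypot frames shown to annotators in total
    return {
        "jobs": jobs,
        "honeypot_slots": slots,
        "coverage": slots / regular_frames,  # validation checks per regular frame
        "avg_reuse": slots / pool_size,      # average appearances of each validation frame
    }

# 10,000 images, 100 expert-annotated validation frames, 100 frames per job (assumed)
for per_job in (5, 10):
    print(per_job, honeypot_plan(10_000, 100, 100, per_job))
```

Under these assumptions, 5 to 10 honeypots per job correspond to 5-10% coverage, with each of the 100 validation images reused 5 to 10 times on average.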

By embedding a small, independent validation set randomly throughout your project, Honeypots keep even the largest datasets under consistent quality control without requiring expensive and time-consuming Ground Truth validation for every batch.

And, since Honeypots reuse the same validation set across multiple jobs, they scale automatically as your project grows, so you don’t need to generate new validation data for each task. This dramatically reduces both validation time and cost while maintaining high-quality standards.

Combined with Immediate Feedback, this validation technique creates a highly reliable yet cost-effective validation system that scales effortlessly with your projects.

How Honeypots Work

Honeypots are based on the idea of a validation set: a sample subset used to estimate the overall quality of a dataset. So, when creating your task, you first need to create a Honeypots validation set and select your validation frames. The whole validation set is available in a special Ground Truth job, which needs to be annotated separately.

Note:

- It’s not possible to select new validation frames after the task is created, but it’s possible to exclude “bad” frames from validation.

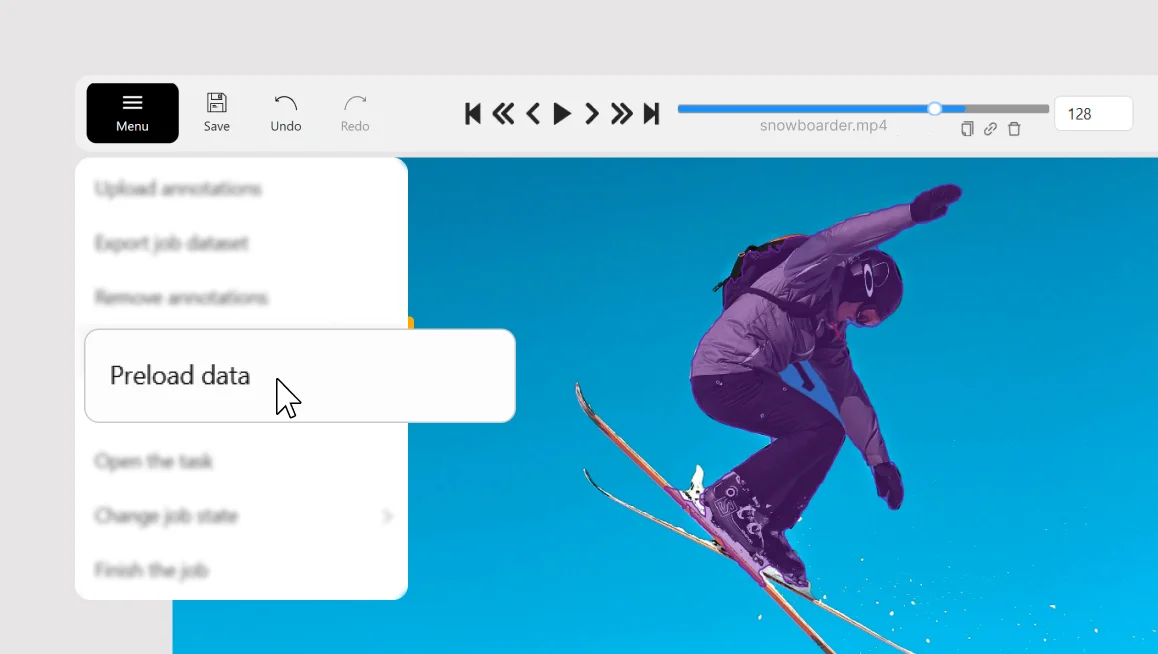

- Honeypots are available only for image annotation tasks and aren’t supported for ordered sequences such as videos.

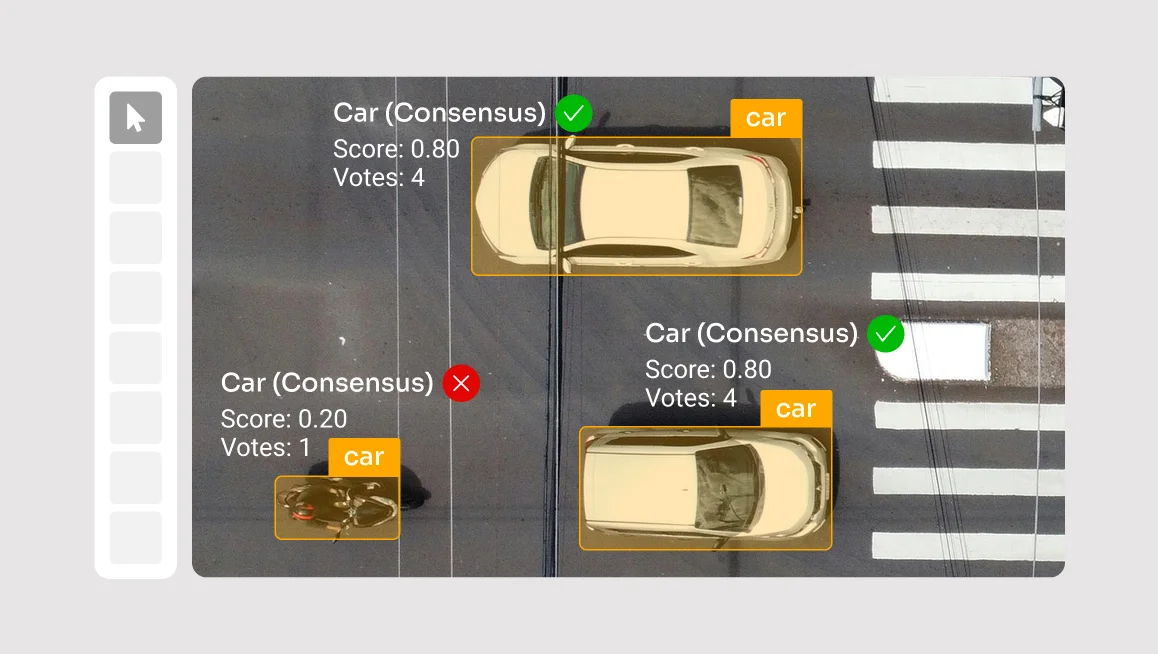

Next, these validation frames are randomly mixed into regular annotation jobs. While your annotators work on the project, CVAT tracks how accurately they handle these ‘hidden’ honeypot frames.
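The mixing step can be pictured with a small simulation. This is only a sketch of the general idea, not CVAT's actual implementation: the function `build_jobs`, its parameters, and the choice to sample honeypots per job without repetition are all assumptions made for illustration.

```python
import random

def build_jobs(regular_frames, validation_pool, job_size, honeypots_per_job, seed=0):
    """Split regular frames into jobs and hide a few validation frames in each.

    Hypothetical helper: returns a list of jobs, each a shuffled list of
    (frame, is_honeypot) pairs.
    """
    rng = random.Random(seed)  # seeded so the layout is reproducible
    jobs = []
    for start in range(0, len(regular_frames), job_size):
        chunk = [(frame, False) for frame in regular_frames[start:start + job_size]]
        # Draw honeypots from the shared pool; no frame repeats within one job
        picks = rng.sample(validation_pool, honeypots_per_job)
        chunk += [(frame, True) for frame in picks]
        rng.shuffle(chunk)  # annotators cannot tell honeypots apart by position
        jobs.append(chunk)
    return jobs

regular = [f"img_{i:05d}.jpg" for i in range(20)]
pool = [f"gt_{i:03d}.jpg" for i in range(4)]
for job in build_jobs(regular, pool, job_size=5, honeypots_per_job=2):
    print([name for name, _ in job])
```

Because every job draws from the same pool, the same validation frame can appear in several jobs, which is what lets a small pool cover a large task.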

After the job is done, you can go to the analytics page to see all the errors and inconsistencies within the project. There, you get quality scores and error analyses for each job, each validation frame, and the whole task. No need for extra tools or manual comparison. Just label, review, and improve.
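To give a feel for what per-job, per-frame, and per-task scores mean, here is a deliberately simplified sketch. It assumes one class label per frame and counts exact matches against the Ground Truth; CVAT's own quality metrics compare annotation shapes and are more involved, so treat the function and data layout below as hypothetical.

```python
from collections import defaultdict

def honeypot_scores(results):
    """Aggregate honeypot checks into per-job, per-frame, and task-level accuracy.

    `results` is an iterable of (job_id, frame_id, annotator_label, gt_label)
    tuples; this layout is an assumption for the example.
    """
    per_job = defaultdict(lambda: [0, 0])    # job_id -> [matches, checks]
    per_frame = defaultdict(lambda: [0, 0])  # frame_id -> [matches, checks]
    for job_id, frame_id, label, gt_label in results:
        hit = int(label == gt_label)
        for bucket in (per_job[job_id], per_frame[frame_id]):
            bucket[0] += hit
            bucket[1] += 1

    def ratio(bucket):
        return bucket[0] / bucket[1]

    matches = sum(b[0] for b in per_job.values())
    checks = sum(b[1] for b in per_job.values())
    task_score = matches / checks if checks else None
    return (
        {job: ratio(b) for job, b in per_job.items()},
        {frame: ratio(b) for frame, b in per_frame.items()},
        task_score,
    )

checks = [
    (1, "gt_001", "red_light", "red_light"),
    (1, "gt_002", "green_light", "red_light"),
    (2, "gt_001", "red_light", "red_light"),
    (2, "gt_002", "red_light", "red_light"),
]
job_scores, frame_scores, task_score = honeypot_scores(checks)
print(job_scores, frame_scores, task_score)
```

A low per-frame score across many jobs can hint at an ambiguous or "bad" validation frame, while a low per-job score points at the annotation work in that job.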

For more detailed setup instructions, shape comparison notes, and analytics explanations, read our full guide.

Try Automated QA and Honeypots Today

The Honeypots QA mode is available out of the box in all CVAT versions, including the open-source edition. However, using it for quality analysis requires a paid subscription to CVAT Online or the Enterprise edition of CVAT On‑Premises.

Ready to catch bad labels before they bite? Sign up or log in to your CVAT Online account to try honeypots today, or contact us to enable them on your self-hosted Enterprise instance.
