Data labeling is the process of assigning meaningful tags or annotations to raw data, such as images, text, audio, or videos, to make it usable for training AI and machine learning models. It can be done manually by human annotators or automatically using pretrained models integrated in data annotation tools.

As AI adoption grows, so does the demand for large, high-quality labeled datasets. However, manual labeling is often slow and resource-intensive. Automated and hybrid approaches address this challenge by accelerating the annotation process and enabling organizations to stay within timelines and budgets.

The goal of automated labeling isn’t to replace humans but to accelerate workflows and reduce costs by automating the most repetitive or straightforward tasks. This allows annotators to dedicate more time and effort to tricky or edge cases, leaving the routine work to algorithms.

How Manual Labeling and Automated Data Labeling Work

Automated data labeling services utilize machine learning models trained on previously labeled datasets. Once trained, these models can be seamlessly integrated into data labeling platforms like CVAT, automating most of the annotation workload.

While automation offers speed and scalability, the most effective strategy often combines it with human oversight — a method known as "human-in-the-loop." This hybrid approach strikes a balance between accuracy and relatively low cost, making it well-suited for real-world applications.

That said, not all scenarios are the same. Depending on the project requirements, data characteristics, and resource availability, there are three main approaches to data labeling: manual, automated, and hybrid.

- Manual Labeling: Human annotators manually review and assign labels to each data point.

- Automated Labeling: Software tools or algorithms automate the labeling process, eliminating the need for human intervention.

- Hybrid Approach: Combines manual and automated labeling methods: human annotators label a subset of data to create a high-quality, relatively small training dataset, which automated methods then use to extend labeling to larger datasets.

Manual Data Labeling

In this approach, human annotators manually review and assign labels to each data point, ensuring high accuracy and quality through careful judgment and attention to detail. This method is ideal when working with novel or sensitive data, edge cases, or tasks that require specialized domain expertise.

It is especially suited for projects where ground truth accuracy is paramount. This approach is commonly used in domains where precision is critical, such as medical imaging, autonomous driving, aerospace, and other fields where even minor errors can have serious consequences.

Automated Data Labeling: ML, Active Learning, Programmatic

Some tools and techniques allow data to be labeled automatically by machines, with little to no human involvement. Here are some examples:

- Machine Learning & Deep Learning Pre-Trained Models: Used exclusively for predictive labeling based on learned patterns without human validation.

- Active Learning (Automated Variant): In semi-automated setups, the model auto-labels data when it's highly confident, reducing the need for constant human input. However, for uncertain cases, human help is still essential, and therefore, this approach is on the fence, balancing between automated and hybrid techniques.

- Programmatic Labeling: Uses rule-based labeling logic implemented through scripts to handle clear-cut annotation tasks systematically. While by itself it is designed to work fully automatically, there are some concerns regarding this approach, and humans still intervene primarily in ambiguous cases or edge scenarios to ensure labeling quality.

These methods are effective for large-scale, repetitive tasks where patterns are clear and confidence is high, such as in e-commerce, content moderation, industrial inspection, and others.

Human-in-the-Loop: Hybrid Data Annotation Approach

Human-in-the-loop (HITL) annotation combines the strengths of both automated and manual labeling. The process starts with a small, manually labeled dataset, which is used to train an initial machine learning model. The amount of manual data required depends on your specific task and the complexity of your dataset; it often takes some experimentation to determine the optimal volume.

Once trained, the model begins labeling new, unlabeled data. These labels are then reviewed by human annotators, who identify and correct any mistakes.

The corrections made by humans are then given back to the model and, therefore, used to refine and improve it further. As the cycle repeats, the model becomes increasingly accurate and reliable, enabling automation to assume a larger share of the labeling work over time.

Human-in-the-loop annotation is particularly useful in domains where automation can accelerate labeling but human judgment remains essential, such as medical diagnostics, financial document processing, and automotive systems. It strikes a balance between efficiency and accuracy, making it ideal for evolving datasets or complex tasks where fully automated methods fall short.

Manual vs. Automated vs. Hybrid: When to Choose Each

Understanding when to select manual or automated labeling depends on several factors, including data complexity, scale, and the desired level of accuracy.

So, how do you know which approach is right for the job?

Use Manual Labeling When:

- You're working with new or sensitive data that contains many edge cases requiring special attention. Human intervention is critical in your case, and all data must be manually checked.

- The task is subjective or requires specialized domain expertise, and no suitable model is available.

- Ground truth accuracy is critical, for example, in safety-critical applications where lives may depend on the outcome.

Use Auto Labeling When:

- You're dealing with large volumes of data that follow consistent and repetitive patterns.

- You have a reliable, pre-trained, or previously developed model, and its performance is good.

- The goal is to maximize efficiency and output with minimal human involvement.

Use Hybrid Labeling When:

- You want to achieve a balance between accuracy, scalability, and costs.

- You can afford to invest time and resources upfront to create a high-quality labeled dataset that supports model training.

- The dataset is diverse—some parts are repetitive and straightforward, while others contain edge cases or require nuanced judgment.

- You plan to improve model performance continuously through iterative human feedback.

Auto-Labeling Annotation Techniques

In fully or partially automated data labeling pipelines, various strategies exist to minimize manual effort while maintaining high-quality labels. The choice of technique depends on the level of model maturity, data complexity, and project constraints.

Pretrained Models

Pretrained models are based on large, diverse datasets and are capable of delivering high-quality labels out of the box or with minimal fine-tuning. Examples like Meta's Segment Anything Model 2 (SAM 2) are particularly valuable for image segmentation.

Benefits:

- Delivers high-quality annotation with minimal setup.

- Useful in domains where labeled data is scarce or expensive.

- Accelerates annotation significantly when well-matched to the domain.

Challenges:

- May require fine-tuning or engineering for domain-specific tasks.

- Performance may degrade in niche applications or where the model’s training data lacks relevant examples.

Use case: In radiology or pathology, pretrained models can help segment organs or anomalies in scans and x-rays.

Active Learning

Active Learning is a machine learning approach where a model identifies the most informative or uncertain data points and asks a human annotator to label them. The idea is to improve model performance efficiently by prioritizing which examples to label, rather than labeling a random sample.

Benefits:

- Let human annotators prioritize labeling the most uncertain or valuable samples, handling all routine work.

- Improves model performance with fewer labeled examples.

- Reduces overall human labeling effort.

Challenges:

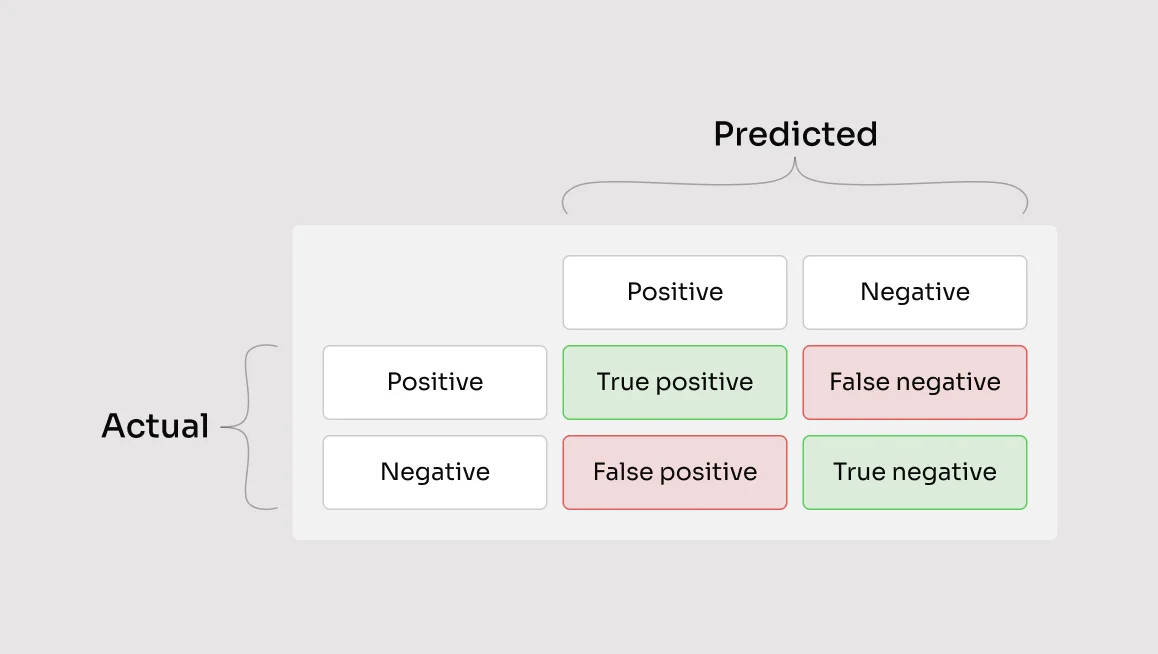

- Without human review, confidence thresholds alone may not prevent propagation of errors.

- Can mislabel edge cases if the model’s uncertainty estimation is poor.

Use Case: Training an object detection model for self-driving cars by automatically selecting and labeling frames where the model is least confident, therefore reducing the need to label every frame manually.

Programmatic Labeling

Programmatic labeling utilizes rules or scripts to assign labels to data automatically. It works best for straightforward cases where the logic is clear (e.g., keywords, patterns, etc.). While it's mostly automated, humans may still intervene to handle edge cases or review uncertain results to maintain high quality.

Benefits:

- Speeds up labeling for repetitive or clear-cut tasks.

- Scales easily with large datasets.

- Reduces the need for manual annotation in well-defined scenarios.

Challenges:

- Only works well when the labeling logic is consistent and straightforward.

- Struggles with ambiguous, messy, or context-heavy data.

- May need human oversight to handle exceptions or improve accuracy.

Use case: A system labels emails as “spam” or “not spam” using simple rules, such as checking for specific phrases ("win money", "free offer"), suspicious domains, or formatting patterns. This labeling is done automatically, but human reviewers may intervene to correct mistakes or update rules when spammers modify their tactics.

CVAT and Automated Labeling

CVAT offers a comprehensive range of automatic and hybrid labeling options, designed to meet different infrastructure needs, control levels, and user types.

Below is an overview of the four primary automation methods supported in CVAT.

Nuclio (CVAT Community and Enterprise)

CVAT integrates with Nuclio, a serverless framework for running machine learning models as functions. This framework is available in CVAT Community and Enterprise versions.

How It Works:

- Requires Docker Compose setup with a specific metadata file.

- Models (e.g., YOLOv11 or SAM2) are wrapped as Nuclio functions using a metadata file and implementation code.

- Once deployed, models are added to CVAT's model registry for use in auto-annotation.

Supported:

- Object detection (bounding boxes, masks, polygons)

- Tracking across frames

- Re-identification and interactive mask generation

Pros:

- Highly flexible; supports multiple model types

- Fully self-hosted and customizable

Cons:

- Requires some tech experience and Docker-level deployment

- Not available in CVAT Online

Third-Party Platforms Integration (CVAT Online and Enterprise)

CVAT supports model integration from third-party platforms Hugging Face and Roboflow, enabling annotation using externally hosted models.

How It Works:

- You can add models in CVAT -> Models Page

- Here is a helpful tutorial:

- Run the models to annotate your data, like in the video here

Pros:

- Easy integration if your models are already hosted on Hugging Face or Roboflow

- Easy to integrate and use, even for non-tech-savvy users

Cons:

- Relies on third-party service availability and APIs

- Slower due to data being sent frame-by-frame to remote servers

Auto-Annotation with CVAT CLI (CVAT Community)

Using CVAT's Python SDK and CLI, you can implement and run custom auto-annotation functions locally.

How It Works:

- Write the script

- Run the script locally with CVAT CLI to annotate a task

- Here is a helpful tutorial showing how to do it step by step

Pros:

- No server configuration needed

- Ideal for solo users and individual experiments

Cons:

- Requires local execution and sufficient machine resources

- No interactive annotation; everything is done through CLI

- Task-wide only

- Currently limited to detection models

Agent-Based Functions (CVAT Online and Enterprise)

CVAT AI agents are a powerful and flexible way to integrate your custom models into the annotation workflow, available on both CVAT Online and CVAT Enterprise, v2.25 and above.

How It Works:

- You start by creating a Python module ("native function") that wraps your model's logic using the CVAT SDK.

- Register the function with CVAT using the command-line interface (CLI). This only sends metadata (such as function names and labels), not the model code or weights.

- Then, run a CVAT AI agent that uses your native function to process annotation requests from the platform.

- When users request automatic annotation, the agent retrieves the task data, runs the model, and returns the results to CVAT.

Pros:

- Custom Models: Use models tailored to your specific datasets and tasks.

- Collaborative: Share models across your organization without requiring users to install or run them locally.

- Flexible Deployment: Run agents anywhere: on local machines, servers, or cloud infrastructure.

- Scalable: Deploy multiple agents to handle concurrent annotation requests.

Cons:

- Only detection-type models (bounding boxes, masks, keypoints) are supported.

- Requires tasks to be accessible to the agent's user account.

Conclusion

Automated data labeling is a powerful tool in the machine learning (ML) workflow arsenal. Used wisely, it can reduce costs and expedite labeling projects without compromising quality. The key lies in understanding your data, your goals, and the capabilities of the automation tools at your disposal.

Want to automate your annotation workflow with CVAT? Sign in or sign up to explore automation features in CVAT Online or contact us if you'd like to explore try CVAT's Enterprise automation features on your server.

.svg)

.png)

.png)

.png)