Picture a self-driving car avoiding oncoming vehicles, turning at the right junction, and gently stopping at a red light. It waits patiently until the light turns green, then gracefully rejoins the traffic, all without the driver lifting a finger.

That's artificial intelligence (AI) at its finest. More specifically, it's computer vision, a field that machine learning engineers know well. Advanced computer vision systems can identify objects in much the same way humans do.

But how does it do so?

The answer: potentially hundreds of hours of video, carefully annotated so that the computer vision model can learn to perceive the vehicle's surroundings much as a human driver would.

Video annotation isn't only useful for developing self-driving cars. Today, computer vision is at the heart of a wide range of applications, which makes video annotation all the more important.

In this guide, we’ll explore:

- What video annotation is and why it matters.

- Video annotation benefits and applications.

- Types of video annotation.

- Challenges and best practices when annotating videos.

Let’s start.

What Is Video Annotation?

Video annotation is the process of labeling specific objects in a video according to a project's labeling requirements. A human annotator (or labeler) highlights regions of a video frame and assigns a label to each one. The annotated video dataset then serves as the ground truth for training computer vision models.

By learning from these annotated objects, a machine learning model becomes better at associating visual data with real-world objects the way humans perceive them. Video annotation is labor-intensive: labelers must patiently identify and classify multiple objects frame after frame. That's why teams often use automated video annotation software to speed things up.

Why Does Video Annotation Matter?

Startups and global enterprises are racing to bring state-of-the-art computer vision systems to market, and the computer vision market is predicted to reach US $72.66 billion by 2031. But to compete and thrive in this industry, relying on state-of-the-art computer vision models isn't enough.

By itself, a computer vision model cannot correctly interpret objects from video data. Like other supervised machine learning algorithms, it needs to learn from datasets that have been curated and annotated for a specific application.

Let's take a traffic monitoring system as an example.

Without learning from an annotated dataset, the computer vision model can't identify cars, pedestrians, or other objects captured by the camera. Instead, it sees only raw pixel values, such as the color and brightness of each pixel, in every frame it processes.

But that changes when you annotate the video.

For example, you can draw a bounding box around a car to teach the computer vision model to recognize it as a car. Likewise, you can place keypoints on people's joints to train the model to recognize pedestrians and their poses.

We'll cover these techniques in more detail later. The point is that video annotation makes computer vision smarter by training it to interpret video data the way we interpret what we see in real life.

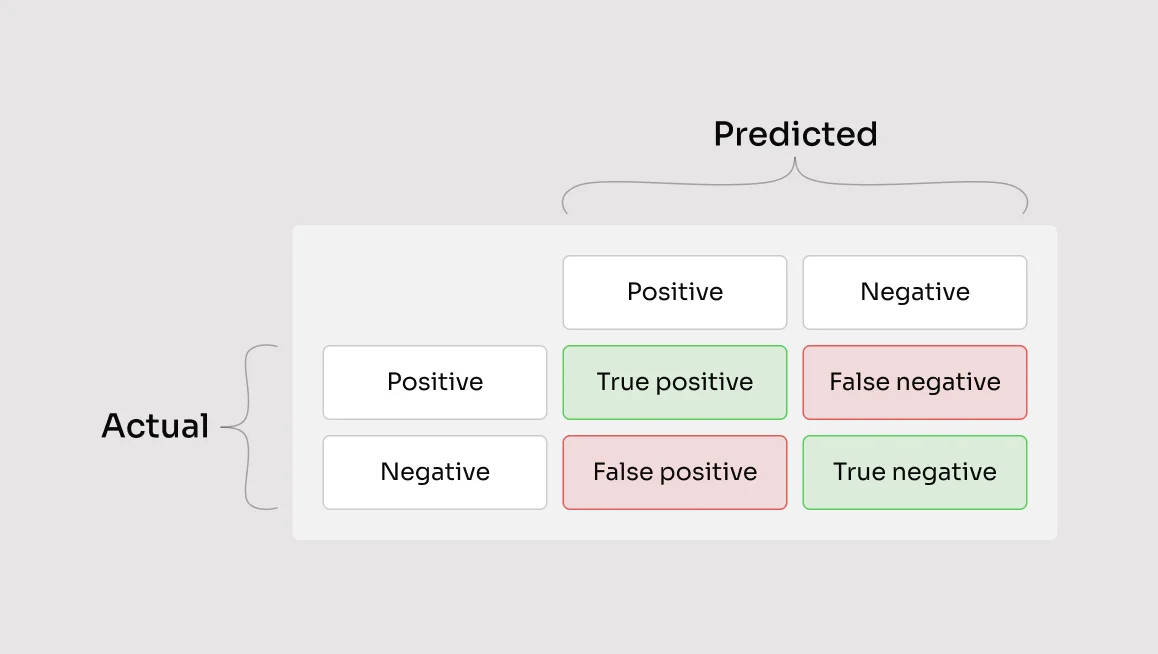

AI models follow the "garbage in, garbage out" principle: if you feed a model low-quality data, it produces inaccurate results. That's why the dataset a model trains on matters as much as the model itself, and why annotation quality is so critical.

Video Annotation vs. Image Annotation

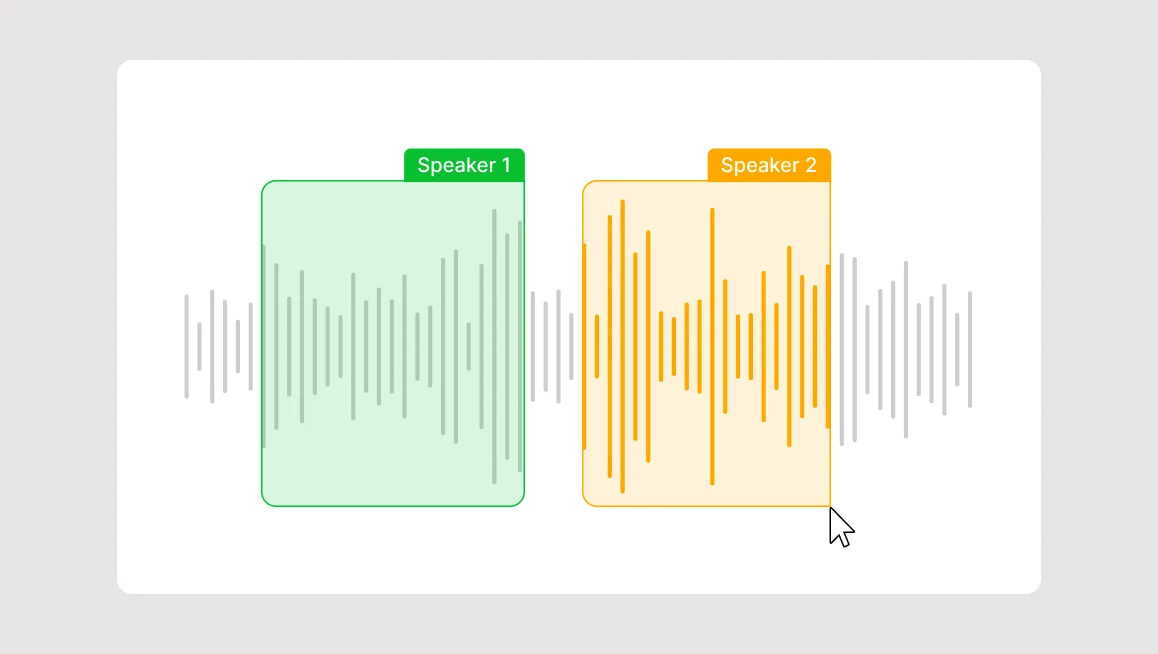

Video annotation is a subset of data annotation, which also includes image annotation. Some people draw parallels between the two, and rightly so. After all, a video is essentially a sequence of image frames. Just as you can draw a bounding box on an image, you can draw one on a still frame of a video.

But the similarities end there. Unlike an image, a video is a dynamic sequence that captures movement and change over time, offering temporal context that static visuals simply can't provide. That's why video annotation is a better fit for use cases where context across time matters, such as tracking object behavior, analyzing motion patterns, or observing how attributes change throughout a scene.

This temporal dimension makes video annotation particularly valuable in domains like surveillance, sports analytics, autonomous driving, and human activity recognition — where understanding what happens between frames is just as important as what's happening in a single frame. Instead of labeling isolated moments, annotators can capture interactions, motion paths, and evolving object states, creating richer datasets for training more accurate and context-aware machine learning models.

Video annotation is inherently more complex because it involves sequential data. With automated data labeling tools like CVAT, however, this complexity becomes manageable and, in many cases, more cost-effective than annotating the same frames one by one as static images.

So how do you know if video annotation is the right approach for your project? Use the checklist below to guide your decision:

Motion detection

Does your application need to track movement across time? If so, video annotation is essential. For example, annotating the trajectory of a ball in a tennis match helps train motion detection models — something image annotation alone can't support.

Behavior analysis

Do you need to understand how objects behave, interact, or change across multiple frames? Video lets you capture sequences of events, which is critical for models that need to infer intent, action, or interaction. For example, by tracking a person across frames, you can teach a computer vision model that someone who walks behind a pillar and reappears on the other side is the same person, even while they are partially or fully obscured.

Cost efficiency

Are you annotating many similar frames? Automated tools like interpolation and object tracking can dramatically reduce manual effort in video annotation. Instead of labeling each frame individually, you label keyframes and let the software fill in the rest — saving time and lowering costs compared to doing the same with static images.
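To put rough numbers on this, the short Python sketch below counts how many frames you would label by hand if you placed a keyframe every few frames and interpolated the rest. The clip length, frame rate, and keyframe spacing are illustrative assumptions. In practice, the spacing depends on how predictably objects move.

```python
def frames_to_label(duration_s: float, fps: float, keyframe_interval: int):
    """Return (total_frames, keyframes) for a clip.

    Assumes a keyframe every `keyframe_interval` frames (0, k, 2k, ...),
    plus the final frame so the last stretch can also be interpolated.
    """
    total = round(duration_s * fps)
    last = total - 1  # index of the final frame
    keyframes = last // keyframe_interval + 1
    if last % keyframe_interval != 0:
        keyframes += 1  # add the final frame as an extra keyframe
    return total, keyframes


total, keys = frames_to_label(duration_s=60, fps=30, keyframe_interval=10)
print(f"{total} frames, {keys} keyframes ({keys / total:.1%} labeled by hand)")
# 1800 frames, 181 keyframes (10.1% labeled by hand)
```

Real savings shrink when objects move erratically and you need denser keyframes. The interpolated frames still need a quick review.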

How Is Video Annotation Applied in Various Industries?

As computer vision evolves, so does its adoption across industries. Here are real-world applications where computer vision models trained on annotated video are making an impact.

Autonomous vehicles

At the heart of an autonomous vehicle is an AI-powered system that processes streams of video in real time, allowing the car to accurately distinguish vehicles, pedestrians, buildings, and other objects. This is only possible because the computer vision model that guides the vehicle was trained on annotated datasets.

Healthcare

Doctors, nurses, and medical staff benefit from imaging systems trained on annotated video datasets. Traditionally, they have relied on manual observation to detect anomalies such as polyps, cancer, or fractures. Now, computer vision helps them diagnose more accurately.

Agriculture

Computer vision systems trained on properly annotated datasets can help improve agricultural yields. Manually monitoring acres of land and crops is a major challenge for farmers, so they use AI to optimize land use, combat pests, target fertilization, and more.

Manufacturing

Product defects that go unnoticed can hurt manufacturers both financially and reputationally. A visual inspection system trained on annotated datasets enables more precise quality checks. Such systems can also make the workplace safer by proactively detecting abnormal or unsafe situations.

Security surveillance

Security surveillance is another area where video annotation is in demand. CCTV cameras let security officers monitor people's movements in real time, but officers can easily miss suspicious behavior, especially when watching multiple feeds. Computer vision helps prevent untoward incidents: the system picks up the patterns it was trained to identify and promptly alerts the officers.

Traffic management

Traffic violations, congestion, and accidents are problems that governments want to solve, and computer vision improves the odds of doing so. Once trained, an AI model can analyze traffic patterns, recognize license plates, and detect accidents from camera feeds.

Disaster response

First responders need to make fast, accurate decisions to save lives and property during large-scale emergencies. Computer vision, combined with aerial imagery, can help them plan rescue operations. For example, emergency teams can send drones equipped with computer vision algorithms to locate wildfire victims.

Types of Video Annotation

Video annotators use different techniques when labeling datasets. Depending on the project requirements, they might label an object with techniques like bounding boxes. Or if they need to train the model to capture pose or movement, they’ll use keypoint annotation.

A skilled annotator knows how to use and combine various techniques according to the labeling requirement.

Bounding boxes

A bounding box is the simplest type of annotation you can make on a video. The annotator would draw a rectangle over an object, which is then tagged with a label. It’s suitable when you need to classify an object and aren’t concerned about separating background elements. For example, you can draw a rectangular box over a dog and tag it as an animal.
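In code, a bounding-box annotation is little more than a label, a frame number, and two corner points. The minimal Python sketch below assumes pixel coordinates with the origin at the top-left of the frame. The `BoundingBox` class and its field names are hypothetical and are not the schema of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """A hypothetical bounding-box annotation on one video frame.

    Coordinates are in pixels, origin at the top-left of the frame:
    (x_min, y_min) is the top-left corner, (x_max, y_max) the bottom-right.
    """
    frame: int
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def __post_init__(self) -> None:
        # Reject empty or inverted boxes as soon as they are created.
        if self.x_max <= self.x_min or self.y_max <= self.y_min:
            raise ValueError(f"invalid box extents: {self}")

    @property
    def area(self) -> float:
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)


dog = BoundingBox(frame=12, label="animal", x_min=40, y_min=60, x_max=200, y_max=180)
print(dog.label, dog.area)  # animal 19200
```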

Polygons

Like bounding boxes, polygons enclose an object in a video frame. However, you can remove unwanted background information by drawing the polygon according to the object’s outline. Usually, we use polygons to label complex, irregular objects.
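To make the background point concrete, this Python sketch compares a polygon's area, computed with the shoelace formula, with the area of the tightest axis-aligned bounding box around the same outline. The triangle coordinates are made up for illustration.

```python
def polygon_area(points):
    """Area of a simple (non-self-intersecting) polygon, via the shoelace formula."""
    n = len(points)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the shape
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def bounding_box_area(points):
    """Area of the tightest axis-aligned rectangle around the same points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


# Made-up outline of a triangular object, e.g. a warning sign, in pixels.
sign = [(0, 0), (100, 0), (50, 80)]
fill = polygon_area(sign) / bounding_box_area(sign)
print(f"{fill:.0%} of the bounding box is object, the rest is background")
# 50% of the bounding box is object, the rest is background
```

For this triangle, half of the bounding box is background that a box-trained model would learn as part of the object, and a polygon excludes it.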

Polylines

Polylines are sequences of connected line segments drawn through multiple points. Unlike polygons, they are open shapes. This makes them helpful for annotating long, narrow objects across frames, such as road lanes, railway tracks, and pathways.
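Structurally, a polyline is an ordered list of points, and no segment joins the last point back to the first. As a small hypothetical example, this Python sketch computes the length of an annotated lane marking from its points. The coordinates are made up and measured in pixels.

```python
import math


def polyline_length(points):
    """Total length of an open polyline: consecutive points are joined,
    but the last point is NOT joined back to the first (unlike a polygon)."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


# Hypothetical lane-marking annotation on one frame, in pixels.
lane = [(0, 0), (3, 4), (3, 10)]
print(polyline_length(lane))  # 11.0
```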

Ellipses

Ellipse annotations are oval-shaped and drawn around objects with round or oval outlines. For example, you can use ellipses when annotating eyes, balls, or bowls.
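An ellipse can be described by its center, two semi-axes, and a rotation angle. The Python sketch below uses that assumed parameterization, which annotation tools may store differently. It checks whether a point, such as a detected keypoint or a mouse click, falls inside an annotated ball. The `Ellipse` class is hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Ellipse:
    """Hypothetical ellipse annotation: center, two semi-axes, and a rotation."""
    cx: float
    cy: float
    rx: float  # semi-axis along the ellipse's own x direction
    ry: float  # semi-axis along the ellipse's own y direction
    angle_deg: float = 0.0  # rotation, positive from the +x axis toward +y

    def contains(self, x: float, y: float) -> bool:
        # Move the point into the ellipse's own coordinate frame:
        # translate by the center, then rotate by -angle.
        dx, dy = x - self.cx, y - self.cy
        t = math.radians(self.angle_deg)
        u = dx * math.cos(t) + dy * math.sin(t)
        v = -dx * math.sin(t) + dy * math.cos(t)
        # Standard ellipse inequality: (u/rx)^2 + (v/ry)^2 <= 1
        return (u / self.rx) ** 2 + (v / self.ry) ** 2 <= 1.0


ball = Ellipse(cx=50, cy=50, rx=20, ry=10)
print(ball.contains(65, 50), ball.contains(50, 65))  # True False
```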

Keypoints & skeletons

Some video annotation projects require pose estimation and motion tracking. That's where keypoint and skeleton annotation come in handy. Keypoints are tags assigned to specific parts of an object; for example, you might place keypoints on body joints and facial features. The machine learning model can then learn to track how these points move relative to each other. You can also connect keypoints to form skeletons, which helps track body movement more precisely.
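A skeleton is essentially a set of named keypoints plus a list of edges ("bones") connecting them. The Python sketch below uses a hypothetical three-point arm skeleton and computes bone lengths per frame. A real bone doesn't stretch, so a length that jumps sharply between frames suggests a misplaced keypoint. Some variation is normal, though, when the person moves toward or away from the camera.

```python
import math

# Hypothetical, simplified arm skeleton: the "bones" are pairs of keypoint names.
SKELETON_EDGES = [("shoulder", "elbow"), ("elbow", "wrist")]


def bone_lengths(keypoints):
    """Map each bone to its length in pixels for one frame.

    keypoints: dict of keypoint name -> (x, y). Bones with a missing endpoint
    (e.g. an occluded joint that wasn't labeled) are skipped.
    """
    lengths = {}
    for a, b in SKELETON_EDGES:
        if a in keypoints and b in keypoints:
            lengths[(a, b)] = math.dist(keypoints[a], keypoints[b])
    return lengths


frame_0 = {"shoulder": (100, 50), "elbow": (100, 90), "wrist": (130, 130)}
frame_1 = {"shoulder": (100, 50), "elbow": (124, 82)}  # wrist occluded

print(bone_lengths(frame_0))  # {('shoulder', 'elbow'): 40.0, ('elbow', 'wrist'): 50.0}
print(bone_lengths(frame_1))  # {('shoulder', 'elbow'): 40.0}
```

The upper arm rotates between the two frames, but its length stays at 40 px, which is what you would expect from consistent annotation.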

Cuboids

Cuboids let annotators label 3D objects with a fairly uniform structure, such as furniture, buildings, or vehicles. A cuboid captures spatial information such as orientation, size, and position, which you can use to train computer vision models. For example, annotators use cuboids when training a traffic surveillance system to identify vehicles.
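One common way to store a 3D cuboid is as a center point, three dimensions, and a heading (yaw) angle. The Python sketch below assumes that parameterization, along with the axis convention and units noted in the comments. It is not any specific tool's export format. The function expands the cuboid into its eight corners, which you would need in order to draw or project it.

```python
import math
from itertools import product


def cuboid_corners(center, size, yaw_deg):
    """Return the 8 corners of a 3D cuboid.

    Assumed parameterization (hypothetical, not a specific tool's format):
    center = (x, y, z), size = (length, width, height),
    yaw_deg = rotation about the vertical z axis.
    """
    cx, cy, cz = center
    length, width, height = size
    t = math.radians(yaw_deg)
    cos_t, sin_t = math.cos(t), math.sin(t)
    corners = []
    for sx, sy, sz in product((-0.5, 0.5), repeat=3):
        # Corner offset in the cuboid's own frame.
        dx, dy, dz = sx * length, sy * width, sz * height
        # Rotate the offset about z, then translate to the center.
        corners.append((cx + dx * cos_t - dy * sin_t,
                        cy + dx * sin_t + dy * cos_t,
                        cz + dz))
    return corners


# A car-sized box: 4 m long, 2 m wide, 1.5 m tall, resting on the ground (z = 0).
car = cuboid_corners(center=(10.0, 5.0, 0.75), size=(4.0, 2.0, 1.5), yaw_deg=0)
print(len(car), min(c[2] for c in car), max(c[2] for c in car))  # 8 0.0 1.5
```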

Automated annotation

Even with a diverse range of annotation tools, labeling dozens of hours of video can be painstaking. Instead of manually tagging objects, you can automatically label them with a video annotation tool like CVAT. Once configured, our software automatically finds objects that you want to label in the video and tags them accordingly. Then, you review them to ensure they’re accurate and make changes if necessary.
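Whatever tool produces the pre-annotations, the review step can be organized so that reviewers spend their time where the model is least sure. The Python sketch below assumes each prediction carries a confidence score. The `Prediction` class and the 0.3 cutoff are hypothetical and are not part of CVAT's API.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """Hypothetical pre-annotation produced by a detection model."""
    frame: int
    label: str
    confidence: float  # model score between 0 and 1


def review_queue(predictions, min_confidence=0.3):
    """Drop very low-confidence predictions and sort the rest so that
    reviewers check the least certain ones first."""
    kept = [p for p in predictions if p.confidence >= min_confidence]
    return sorted(kept, key=lambda p: p.confidence)


predictions = [
    Prediction(0, "car", 0.95),
    Prediction(0, "person", 0.42),
    Prediction(1, "car", 0.12),  # likely a false positive; dropped
    Prediction(1, "person", 0.71),
]
for p in review_queue(predictions):
    print(p.frame, p.label, p.confidence)
```

Dropping detections reduces noise at the cost of recall. Reviewers should therefore still look for objects the model missed entirely.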

Learn more about how automated annotation works in CVAT.

How to Do Video Annotation for Computer Vision

Whether you want to train an autonomous vehicle or identify human faces, you start by labeling the datasets. If you've never done it before, follow these steps that experienced video annotators use.

Step 1: Define your annotation requirement

Every annotation project is different. Know what you need to label in the video and consider the complexity of doing so. For example, categorizing people in public areas is relatively simple. But tracking individual movements requires more effort. From there, decide on which data annotation tool to use.

Step 2: Choose the right video annotation tool

Not all data annotation tools are suitable for video annotation tasks. Some lack advanced features, such as automatic annotation or semantic segmentation, that help you save labeling time and cost. Beyond annotation features, pay attention to project management capabilities, a user-friendly interface, data security, and quality assurance features when choosing data labeling software.

Step 3: Upload video data

Next, prepare the video that you want to annotate. In some cases, you might need to resize or denoise the footage to improve its quality, or extract only the frames you need. After that, import the video file into the annotation software.

Step 4: Annotate the video dataset

Create a class for each type of object you want to label. Then, use the appropriate tools, such as bounding boxes, polygons, or skeletons, to label the objects. You can mark keyframes, tag the objects in them, and let the tool interpolate the frames in between. This lets you annotate faster without labeling every single frame by hand.
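The simplest form of interpolation is linear, where each box coordinate moves in a straight line between two keyframes. This Python sketch assumes boxes stored as `(x_min, y_min, x_max, y_max)` tuples keyed by frame number. Annotation tools may use other representations and more sophisticated tracking, so treat it as an illustration of the idea rather than how any particular tool implements it.

```python
from typing import Dict, Tuple

# (x_min, y_min, x_max, y_max) in pixels; an assumed representation.
Box = Tuple[float, float, float, float]


def interpolate_track(keyframes: Dict[int, Box]) -> Dict[int, Box]:
    """Fill every frame from the first to the last keyframe by linear interpolation."""
    if not keyframes:
        return {}
    frames = sorted(keyframes)
    result: Dict[int, Box] = {}
    for start, end in zip(frames, frames[1:]):
        a, b = keyframes[start], keyframes[end]
        for f in range(start, end):
            t = (f - start) / (end - start)  # 0.0 at `start`, approaching 1.0 near `end`
            result[f] = tuple(av + t * (bv - av) for av, bv in zip(a, b))
    result[frames[-1]] = keyframes[frames[-1]]  # keep the last keyframe as labeled
    return result


# Two hand-labeled keyframes: the box slides 20 px to the right over 4 frames.
track = interpolate_track({0: (10, 20, 50, 60), 4: (30, 20, 70, 60)})
print(track[2])  # (20.0, 20.0, 60.0, 60.0)
```

Linear interpolation works well when objects move at a steady pace. For curved or accelerating motion, add keyframes where the motion changes.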

Step 5: Review the annotation

Mistakes might happen during annotation, with or without automated labeling tools. So, it’s important to check the annotated frames thoroughly before using the dataset to train the computer vision model. Look for mistakenly annotated objects, missing labels, and other inconsistencies.
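Some of these checks can be scripted before a human reviewer opens the task. The Python sketch below assumes a hypothetical, simplified data layout in which a track ID maps to a label and the set of frames where the object is annotated. It flags two common problems: labels outside the agreed class list and frames missing from the middle of a track.

```python
def find_issues(tracks, allowed_labels):
    """Flag unknown labels and frame gaps in object tracks.

    tracks: hypothetical layout mapping track id -> (label, set of frame numbers
    where the object is annotated). A gap is a frame between a track's first
    and last annotation with no label. Gaps can be legitimate (the object left
    the view), so they are flagged for a human to confirm, not auto-fixed.
    """
    issues = []
    for track_id, (label, frames) in sorted(tracks.items()):
        if label not in allowed_labels:
            issues.append(f"track {track_id}: unknown label {label!r}")
        if not frames:
            issues.append(f"track {track_id}: no annotated frames")
            continue
        missing = sorted(set(range(min(frames), max(frames) + 1)) - frames)
        if missing:
            issues.append(f"track {track_id}: no annotation on frames {missing}")
    return issues


tracks = {
    1: ("car", {0, 1, 2, 3}),
    2: ("pedestrain", {0, 1, 2}),  # misspelled class name
    3: ("person", {0, 1, 4, 5}),   # frames 2 and 3 unlabeled
}
for issue in find_issues(tracks, allowed_labels={"car", "person"}):
    print(issue)
# track 2: unknown label 'pedestrain'
# track 3: no annotation on frames [2, 3]
```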

Key Challenges in Video Annotation

Video annotation is key to enabling state-of-the-art computer vision applications. But creating accurate and consistent datasets remains challenging, even for experienced annotators. If you’re starting a video annotation project, be mindful of these challenges.

Labeling inconsistency

Human labelers play a vital role in video annotation, regardless of the tools you use, so annotation results are subject to individual interpretation. For example, one annotator may classify a dog as a Poodle, while another labels it a Toy Poodle. The two labels are similar, but to a machine learning algorithm they are different classes.

So, to avoid misinterpretations, provide your team with clear labeling specs. You can read more about it here.
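When several annotators label the same frames, you can measure how consistently they work. For boxes, a common approach combines intersection over union (IoU) with an exact label match. The Python sketch below is a minimal version of that idea. The 0.5 IoU threshold is a widely used convention rather than a universal rule, and the box coordinates are made up.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def annotations_agree(ann_a, ann_b, min_iou=0.5):
    """Two (label, box) annotations of the same object agree only if the labels
    match exactly and the boxes overlap by at least `min_iou`."""
    (label_a, box_a), (label_b, box_b) = ann_a, ann_b
    return label_a == label_b and iou(box_a, box_b) >= min_iou


annotator_1 = ("Poodle", (10, 10, 110, 110))
annotator_2 = ("Toy Poodle", (20, 10, 120, 110))
print(round(iou(annotator_1[1], annotator_2[1]), 3))  # 0.818
print(annotations_agree(annotator_1, annotator_2))    # False: the labels differ
```

The boxes overlap well, so the disagreement comes entirely from the label. A clear labeling spec is designed to prevent exactly this kind of mismatch.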

Inadequate training

Before they annotate, labelers must receive proper training to ensure they’re familiar with the video annotation process, tools, and expectations. Otherwise, you risk compromising the outcome with inaccurate labeling, reworks, and costly delays.

Immense datasets

Video files are much larger than text or image data. Annotating thousands of video frames can consume considerable time and effort that not all companies can spare.

Explore the advanced data annotation course we offer to upskill your annotators.

Data security and privacy

Video annotation requires collecting, storing, and processing large volumes of videos, some of which might contain sensitive information. You need ways to secure datasets throughout the entire labeling pipeline and comply with data privacy laws.

Project timeline

Time to market puts additional pressure on annotators. Video annotation is a laborious process on its own, and if the team relies on manual tools, delays are likely as annotators spend time fixing labeling issues.

We know that video labeling can be very tedious, even if you’re equipped with the right tool. That’s why we help companies save time and costs with professional video annotation services.

Best Practices when Annotating Videos

Don’t be discouraged by the hurdles that might complicate video annotation. By taking precautions and smarter approaches, you can improve annotation quality without committing excessive resources.

Here’s how.

Automate when you can

Don't hesitate to automate the labeling process. Sure, automatic annotation is not perfect, and you'll likely need to review all the frames to make sure they're labeled correctly. But automatic annotation saves a tremendous amount of time, so you can accelerate your computer vision development with faster labeling and lower costs.

If you use CVAT, you can take automated labeling further with SAM-powered annotation. We integrate SAM 2, or Segment Anything Model 2, with our data labeling software to enable instant segmentation and automated tracking of complex objects.

Align video quality with annotation goals

Annotators often have little or no control over the video they receive. If you control data collection, try to ensure the recordings are high quality to start with. Then, use a video quality appropriate to the labeling requirements. For example, if you want to annotate large objects, lower-resolution clips are usually enough. This saves a lot of time on intermediate steps such as creating tasks, downloading videos, buffering, and automated labeling. High-quality video, on the other hand, is preferable when you need pixel-level precision.

Keep labels and datasets organized

Video annotation can quickly get out of hand if you don't stick to an organized workflow. Overlapping classes, misplaced datasets, and other sources of confusion can limit your annotators' productivity. Fortunately, a user-friendly data annotation tool makes these problems much easier to avoid.

Interpolate sequences with keyframes

You don't need to label every single frame in a video. Instead, place keyframes where an object's motion changes and let the tool interpolate the predictable frames in between. Trust us: this will save you lots of time.

Set up a feedback system

Annotators need feedback from domain experts and machine learning engineers to know if they’re labeling correctly. Likewise, any updates in labeling requirements must be communicated to the entire team. Usually, good data annotation software is equipped with a feedback mechanism that streamlines communication.

Import shorter videos

Long videos clog up bandwidth when you upload them to an online annotation tool. If you don't want to spend hours waiting for a video to load, split it into shorter clips, preferably under one minute each.
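To plan the cuts, the Python sketch below splits a video's duration into equal-length segments that each stay under a minute. The 59-second default is an arbitrary, hypothetical choice. Equal segments avoid leaving a tiny leftover clip at the end.

```python
import math


def segment_bounds(duration_s, max_len_s=59.0):
    """Split a video of `duration_s` seconds into equal (start, end) segments,
    each no longer than `max_len_s` (59 s keeps clips under the 1-minute mark)."""
    count = max(1, math.ceil(duration_s / max_len_s))
    step = duration_s / count  # equal lengths avoid a tiny leftover clip
    return [(round(i * step, 3), round((i + 1) * step, 3)) for i in range(count)]


bounds = segment_bounds(150)
print(bounds)  # [(0.0, 50.0), (50.0, 100.0), (100.0, 150.0)]

# Interior cut points, formatted as a comma-separated list of seconds.
split_points = ",".join(str(end) for _, end in bounds[:-1])
print(split_points)  # 50.0,100.0
```

You can then cut the file with a tool such as ffmpeg. For example, you could pass the interior cut points to its segment muxer's `-segment_times` option. When stream-copying without re-encoding, ffmpeg can only cut at the video codec's own keyframes (not to be confused with annotation keyframes), so actual clip lengths may differ slightly from the plan.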

Conclusion

Video annotation is key to creating accurate computer vision models. Compared to image annotation, video labeling captures temporal context and is more practical for many real-world applications. That said, video annotation isn't without challenges. To annotate video well, you need to address concerns like dataset volume, labeling consistency, and annotator expertise.

Hopefully, you found useful tips in this guide to improve your annotation quality and reduce time to market. Remember, a data annotation tool equipped with automated features goes a long way in video annotation.

Explore CVAT and annotate video more effectively now.
