We’re excited to announce another special addition to our automatic and model-assisted labeling suite: Hugging Face Transformers.

Hugging Face Transformers is an open-source Python library that provides ready-to-use implementations of modern machine learning models for natural language processing (NLP), computer vision, audio, and multimodal tasks.

The library includes thousands of pretrained models, including a broad selection of computer vision models that you can now connect to CVAT Online and CVAT Enterprise for automated data annotation.

The current integration supports the following tasks:

- Image classification

- Object detection

- Object segmentation

Pick a model from the Transformers library, connect it to CVAT through an agent, and run the agent. You get fully labeled frames, or even entire datasets, complete with the right shapes and attributes, in a fraction of the time manual labeling would take.

Annotation possibilities unlocked

As with the Ultralytics YOLO and Segment Anything Model 2 integrations, this addition gives ML and AI teams several new ways to optimize and automate their workflows.

(1) Pre-label data using the right model for the task

Connect any supported Hugging Face Transformers model that matches your annotation goals (a classifier, detector, or segmentation model) and run it directly in CVAT to pre-label your data. Each model can be triggered individually, so you can generate different types of annotations for the same dataset without scripts or external tools.

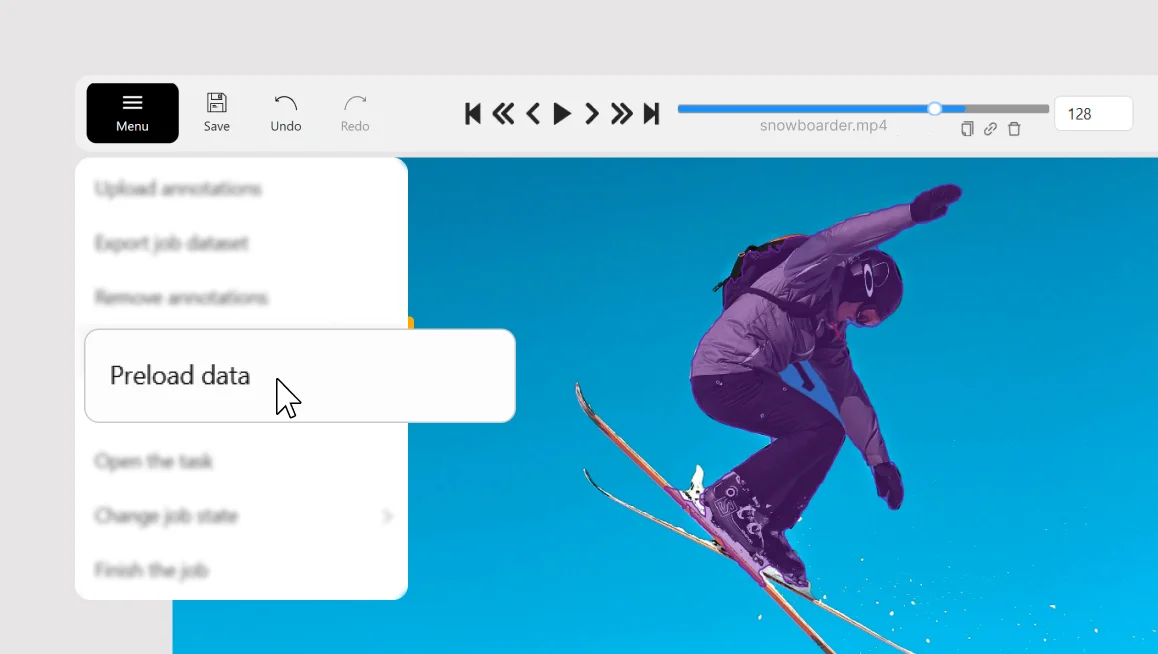

(2) Label entire tasks in bulk

Working with a large dataset? Apply a model to an entire task in one step. Open the Actions menu and select Automatic annotation. CVAT will send the request to your agent and automatically annotate all frames across all jobs, reducing manual effort and repetitive work.

(3) Share models across teams and projects

Register a model once and make it instantly available across your organization in CVAT. Team members can use it in their own tasks with no local setup, ensuring consistent labeling workflows at scale.

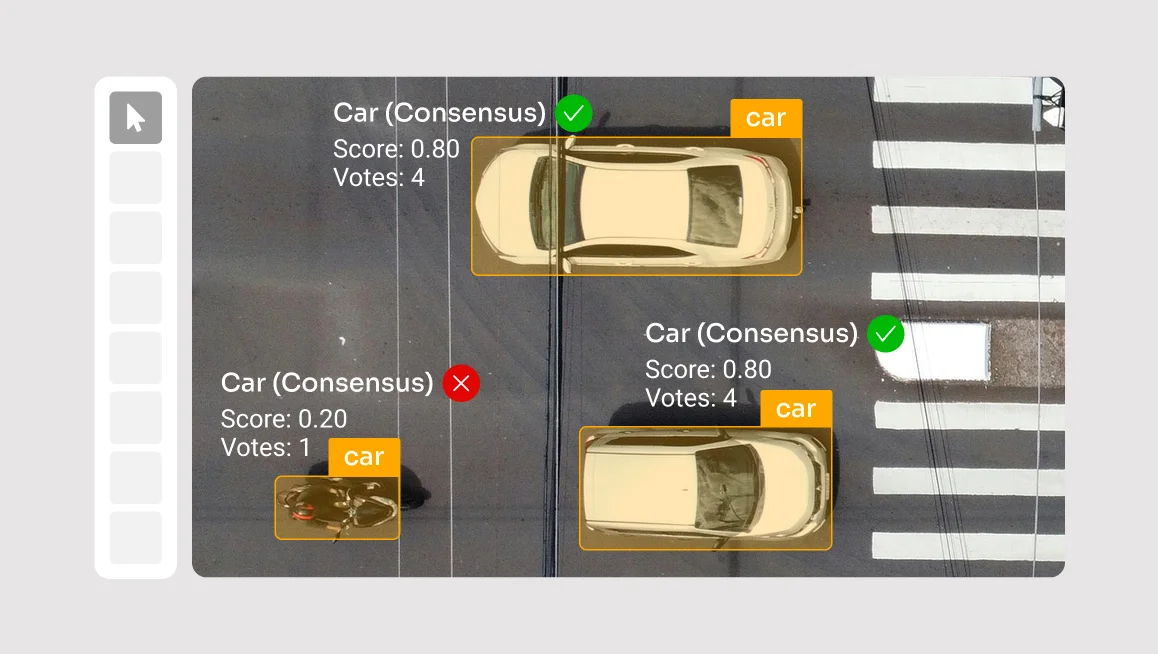

(4) Validate model performance on real data

Evaluate any custom or fine-tuned Hugging Face Transformers model directly on annotated datasets in CVAT. Compare model predictions with human labels side by side, identify mismatches, and spot edge cases, all within the same environment.
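To make the idea of a "mismatch" concrete, here is a minimal offline sketch that flags predicted boxes with no sufficiently overlapping human box, using intersection over union (IoU). It is an illustration only, not how CVAT compares annotations internally. The function names, the `[x_min, y_min, x_max, y_max]` box layout, and the 0.5 threshold are assumptions made for this example.

```python
from typing import List, Sequence

Box = Sequence[float]  # assumed layout: [x_min, y_min, x_max, y_max] in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def unmatched_predictions(
    predicted: List[Box], human: List[Box], threshold: float = 0.5
) -> List[Box]:
    """Return predicted boxes that overlap no human box at IoU >= threshold."""
    return [p for p in predicted if all(iou(p, h) < threshold for h in human)]


# Hypothetical data: one human-labeled box and two model predictions.
human_boxes = [[0, 0, 10, 10]]
predicted_boxes = [[1, 1, 10, 10], [20, 20, 30, 30]]
mismatches = unmatched_predictions(predicted_boxes, human_boxes)
print(mismatches)  # the second prediction has no human counterpart
```

Running the same check in the other direction (human boxes with no matching prediction) would surface objects the model missed.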

How it works

Step 1. Register the function

Create a native Python function that loads your Hugging Face model (e.g., ViT, DETR, or segmentation transformers) and defines how predictions are returned to CVAT. Register this function via the CLI.

Note: The same function works for both CLI-based and agent-based annotation.
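As a rough illustration of the "defines how predictions are returned" part, the sketch below converts detector output into rectangle records keyed by label ID. The input dictionaries follow the format returned by the Transformers object-detection pipeline (`score`, `label`, and a `box` with `xmin`, `ymin`, `xmax`, `ymax`). Everything else is an assumption for this example: the `detections_to_rectangles` name, the `Rectangle` record layout, and the score threshold are hypothetical, and a real function would load the model and build shapes with the CVAT SDK rather than plain dictionaries.

```python
from typing import Dict, List, TypedDict


class Rectangle(TypedDict):
    """Hypothetical stand-in for a CVAT rectangle shape."""

    label_id: int
    points: List[float]  # [x_min, y_min, x_max, y_max] in pixels


def detections_to_rectangles(
    detections: List[dict],
    label_ids: Dict[str, int],
    min_score: float = 0.5,
) -> List[Rectangle]:
    """Convert detector output into rectangle records keyed by label ID."""
    rectangles: List[Rectangle] = []
    for det in detections:
        # Skip low-confidence predictions and labels the task does not define.
        if det["score"] < min_score or det["label"] not in label_ids:
            continue
        box = det["box"]
        rectangles.append(
            {
                "label_id": label_ids[det["label"]],
                "points": [
                    float(box["xmin"]),
                    float(box["ymin"]),
                    float(box["xmax"]),
                    float(box["ymax"]),
                ],
            }
        )
    return rectangles


# Made-up sample in the object-detection pipeline's output format.
sample = [
    {"score": 0.98, "label": "cat", "box": {"xmin": 10, "ymin": 20, "xmax": 110, "ymax": 220}},
    {"score": 0.30, "label": "cat", "box": {"xmin": 0, "ymin": 0, "xmax": 5, "ymax": 5}},
    {"score": 0.91, "label": "sofa", "box": {"xmin": 0, "ymin": 50, "xmax": 300, "ymax": 240}},
]
shapes = detections_to_rectangles(sample, {"cat": 0, "dog": 1})
print(shapes)
```

Only the first detection survives here: the second falls below the score threshold, and the third carries a label the task does not define. Dropping unknown labels, rather than raising an error, is one possible design choice; a stricter function might log or reject them.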

Step 2. Start the agent

Launch an agent through the CLI. It connects to your CVAT instance, listens for annotation requests, runs your model, and returns predictions to CVAT.

Step 3. Create or select a task in CVAT

Upload your images or video and define labels that match the model's output and your evaluation needs.

Step 4. Choose the model in the UI

Open the AI Tools panel inside your job and select your registered Hugging Face model under the corresponding tab.

Step 5. Run AI annotation

CVAT sends the request to the agent, which performs inference and returns predictions as annotation shapes tied to the correct label IDs.

Get started

Ready to enhance your annotation workflow with Hugging Face Transformers? Sign in to CVAT Online and try it out.

For more information about Hugging Face Transformers, visit the official documentation.

For more details on CVAT AI annotation agents, read our setup guide.
