When we picture a fully operational robot, we imagine a machine that can see, move, and react to its surroundings with precision. But behind that capability lies one crucial process: data annotation.

As a robot moves around and interacts with the world, it receives massive streams of information from cameras, LiDAR sensors, and motion detectors, but this data is meaningless until it’s labeled and structured. That’s where data annotation comes in: it turns those raw inputs into recognizable patterns that machine learning models can interpret and act upon.

Think of annotation as the bridge between raw data and intelligent behavior, with each bounding box, 3D tag, or labeled sound clip teaching a robot how to move, grasp, or navigate.

Why Is Data Annotation in Robotics Unique?

Data annotation in robotics is distinct because it must handle multiple sensor inputs, fast-changing environments, and safety-critical outcomes. Three factors stand out:

- Variety of data types: A warehouse robot can capture RGB images, LiDAR depth maps, and IMU motion data at the same time. Annotators need to align these streams so the robot understands what an object is, how far away it is, and how it is moving.

- Environmental complexity: A robot navigating a factory floor may see drastically different lighting between welding zones, shadowed aisles, and outdoor loading bays. It also must track moving forklifts, shifting pallets, and workers walking across its path. Annotated data must represent all these variations so models do not fail when conditions change.

- Safety sensitivity: If an obstacle in a 3D point cloud is labeled incorrectly, a mobile robot may misjudge clearance and strike a shelf or worker. For instance, Amazon’s warehouse AMRs rely on accurately labeled LiDAR data to avoid collisions when navigating between racks. Even a small annotation error, like misclassifying a reflective surface, can cause a robot to stop abruptly or make unsafe turns.

Beyond this, robotics is also unique because it is scaling at a pace few industries match.

According to the International Federation of Robotics (IFR) World Robotics 2025 report, global installations of industrial robots totaled 542,076 units in 2024, more than double the number installed a decade earlier.

The capabilities of general-purpose robots have also advanced rapidly in 2024–2025. A clear example is Figure’s 01 robot, which in 2024 successfully performed a warehouse picking task using OpenAI-trained multimodal models.

Achieving this required large volumes of annotated 3D point clouds, motion trajectories, and force-feedback data, illustrating how teams like Figure and Agility Robotics rely on richly labeled sensor inputs to enable robots to operate safely in human environments.

These innovations show how dynamic robotics has become, and why precise, context-aware annotation is essential. As robots expand into more real-world tasks, their performance depends directly on the quality of the data used to train them.

Key Use Cases of Data Annotation in Robotics

Robots rely on labeled sensor data to understand the world around them and make safe, reliable decisions. In practice, data annotation powers several core capabilities that appear across nearly every modern robotics system:

- Autonomous navigation, where labeled images, depth maps, and point clouds help robots detect obstacles, follow routes, and adjust to changing layouts.

- Robotic manipulation, which depends on annotated grasp points, object boundaries, textures, and contact events so arms can pick, sort, or assemble items accurately.

- Human–robot interaction, supported by labeled gestures, poses, and proximity cues that allow robots to move safely around people and predict their intentions.

- Inspection and quality control, where annotated visual and sensor data help robots detect defects, measure alignment, or identify early signs of wear.

- Semantic mapping and spatial understanding, where labels on floors, walls, doors, racks, and equipment help robots build structured maps of their environment.

A widely referenced example comes from autonomous driving research. The Waymo Open Dataset provides millions of labeled camera images and LiDAR point clouds showing vehicles, pedestrians, cyclists, road edges, and environmental conditions. These annotations train Waymo’s autonomous systems to detect obstacles, anticipate movement, and navigate safely in dense urban environments.

These use cases demonstrate how annotation turns raw sensor streams into actionable understanding, forming the backbone of safe and capable robotic behavior.

Types of Data Annotation in Robotics and Their Applications

Robotics relies on diverse data sources, each requiring a specific annotation method. From vision to motion, labeling ensures robots can interpret and act safely in dynamic environments. These annotated datasets power navigation, manipulation, and autonomous decision-making across industries.

Visual and Image Annotation

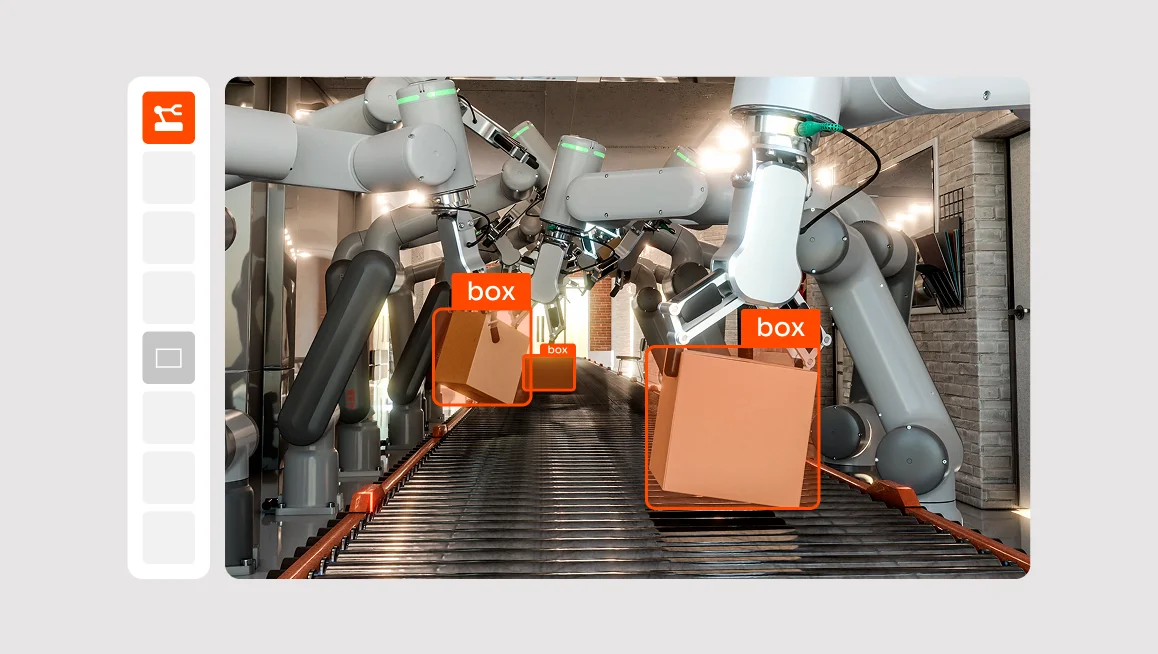

Cameras give robots their sense of sight, but the data needs structure. Annotators provide it by outlining and classifying objects with bounding boxes, polygons, and segmentation masks.

Common visual annotation tasks include:

- Object detection and classification

- Pose and gesture tracking

- Instance segmentation in complex scenes

One example is Ambi Robotics, which uses large annotated image datasets to train sorting arms to recognize boxes and barcodes. Platforms like CVAT streamline this process with AI-assisted labeling, frame interpolation, and attribute tagging.

3D Point Cloud and Depth Data Annotation

LiDAR and depth sensors capture spatial geometry, forming dense 3D point clouds that need precise labeling. Annotators define objects, surfaces, and distances so robots can move safely through real-world spaces.

Autonomous systems like Nuro’s delivery vehicles depend on this annotation to recognize pedestrians and road edges.

CVAT supports 3D point-cloud annotation with cuboids, allowing teams to mark and classify objects. It also provides an interactive 3D view, frame navigation, and integrated project management features, so robotics teams can handle this work in a single environment.

Sensor and Motion Data Annotation

Robots depend on sensor feedback to monitor movement and performance. For example, annotating accelerometer, gyroscope, or torque data helps identify start points, motion shifts, and anomalies.

In industrial settings, this labeling improves precision and helps predict faults, which is why companies like Boston Dynamics and ABB Robotics use it to refine robot motion and detect wear.
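
As a rough illustration of how motion segments might be pre-marked for annotators, the sketch below flags runs of samples whose magnitude exceeds a threshold. The function name `label_segments` and the threshold value are assumptions made for this example; real pipelines typically filter the signal first and let a human confirm each segment.

```python
def label_segments(samples, threshold):
    """Return (start, end) index pairs, end exclusive, where |sample| > threshold."""
    segments = []
    start = None
    for i, value in enumerate(samples):
        active = abs(value) > threshold
        if active and start is None:
            # A new candidate segment begins at this sample.
            start = i
        elif not active and start is not None:
            # The signal dropped back below the threshold: close the segment.
            segments.append((start, i))
            start = None
    if start is not None:
        # The recording ended while a segment was still open.
        segments.append((start, len(samples)))
    return segments


# Hypothetical torque readings; the threshold is illustrative only.
torque = [0.0, 0.1, 1.2, 1.5, 0.2, 0.0, 2.0]
candidates = label_segments(torque, threshold=1.0)
```

Each returned pair is a candidate region that an annotator could then label as a motion start, a shift, or an anomaly.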

Audio and Tactile Data Annotation

Sound and touch give robots environmental awareness. Annotators label motor noises, ambient sounds, and tactile pressure data so systems can detect irregularities or adjust handling.

Key annotation areas include:

- Detecting mechanical or ambient sound changes

- Labeling tactile feedback and slip events

- Linking sensory data with visual or motion inputs

Robots from Rethink Robotics and RightHand Robotics use such annotations to refine grip and texture handling. Integrated platforms like CVAT, combined with audio or haptic plugins, allow engineers to visualize and align these sensory streams for more adaptive learning models.

Best Practices for Effective Annotation in Robotics

Robotics annotation requires accuracy, consistency, and clear processes. These best practices help teams create safer, more capable robotic systems.

Create Detailed, Robotics-Specific Annotation Guidelines

Robotics data varies widely across sensors, environments, and behaviors. Clear guidelines ensure annotators understand how to handle occlusions, fast-moving objects, and overlapping sensor data.

For example, a warehouse robot may record an object partially hidden behind a pallet. Guidelines should specify how to label the object’s visible area and how to tag occlusion attributes so the model learns realistic edge cases. Consistent rules across image, LiDAR, and depth frames prevent drift as datasets scale.

Use Tools Designed for Multi-Sensor and 3D Workflows

General-purpose labeling tools often struggle with robotics workloads that include point clouds, video frames, IMU data, and synchronized sensor streams. Tools like CVAT support 3D cuboids, interpolation for long sequences, and multi-modal annotation, which reduces errors when labeling spatial data.

For instance, with CVAT, annotators can mark a forklift in a 3D point cloud and maintain label continuity across hundreds of frames using interpolation rather than redrawing boxes manually.
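
To make the idea of keyframe interpolation concrete, here is a minimal sketch that linearly blends a cuboid's center, size, and yaw between two manually labeled keyframes. The `Cuboid` type and the `interpolate` and `fill_frames` functions are hypothetical names for this example, not CVAT's API, and CVAT's own interpolation may work differently.

```python
import math
from dataclasses import dataclass


@dataclass
class Cuboid:
    """Hypothetical 3D box: center (x, y, z), size (l, w, h), yaw in radians."""
    center: tuple
    size: tuple
    yaw: float


def _lerp(a, b, t):
    return a + (b - a) * t


def interpolate(start, end, t):
    """Blend two keyframe cuboids; t=0 gives start, t=1 gives end."""
    # Follow the shortest angular path so a box near +pi does not spin
    # the long way around to reach -pi.
    diff = end.yaw - start.yaw
    delta = math.atan2(math.sin(diff), math.cos(diff))
    return Cuboid(
        center=tuple(_lerp(a, b, t) for a, b in zip(start.center, end.center)),
        size=tuple(_lerp(a, b, t) for a, b in zip(start.size, end.size)),
        yaw=start.yaw + delta * t,
    )


def fill_frames(start_frame, start, end_frame, end):
    """Return {frame_index: Cuboid} for every frame between two keyframes, inclusive."""
    span = end_frame - start_frame
    if span <= 0:
        raise ValueError("end_frame must be greater than start_frame")
    return {
        frame: interpolate(start, end, (frame - start_frame) / span)
        for frame in range(start_frame, end_frame + 1)
    }
```

With keyframes at frames 0 and 10, frame 5 lands halfway between the two boxes. Linear blending is only a reasonable guess for smooth motion; an annotator would still add keyframes wherever the object turns or accelerates.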

Combine Automation with Human Oversight

Automation accelerates labeling but cannot replace expert review. AI-assisted pre-labeling is useful for repetitive tasks such as drawing bounding boxes or segmenting floor surfaces, but humans must refine edge cases, ambiguous objects, or safety-critical scenes.

A typical workflow might use automated detection to pre-label all pallet locations in a warehouse scan, while a human annotator fixes misclassifications, handles cluttered areas, or corrects mislabeled reflections or shadows.
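
One way such a workflow could decide what a human looks at first is to route pre-labels by model confidence and class. The sketch below is an assumption-laden example: the `PreLabel` type, the `route` function, the 0.85 threshold, and the list of safety-critical classes are all hypothetical and would be set per project.

```python
from dataclasses import dataclass


@dataclass
class PreLabel:
    frame_id: int
    label: str
    confidence: float  # model score in [0, 1]


# Hypothetical policy values; tune these per project.
REVIEW_THRESHOLD = 0.85
SAFETY_CRITICAL = {"person", "forklift"}


def route(prelabels):
    """Split pre-labels into a spot-check queue and a full-review queue."""
    spot_check, full_review = [], []
    for item in prelabels:
        # Low-confidence or safety-critical labels always get a full human pass.
        needs_full_review = (
            item.confidence < REVIEW_THRESHOLD or item.label in SAFETY_CRITICAL
        )
        (full_review if needs_full_review else spot_check).append(item)
    return spot_check, full_review
```

Even the spot-check queue is still sampled by people in this sketch; the split only prioritizes reviewer time.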

Label with Deployment Conditions in Mind

Robots operate in environments that shift constantly. Annotations must include examples of glare, motion blur, low light, irregular terrain, human interaction, and other edge cases so the model behind the robot can perceive and act reliably in unconstrained, safety-critical settings.

For example, an autonomous robot navigating a distribution center should be trained with labeled images captured during daytime, nighttime, and transitional lighting so it performs reliably across all shifts.
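
A simple coverage check can show whether a dataset actually spans those conditions. The sketch below assumes each frame carries a single lighting tag; the tag names, the `coverage_gaps` function, and the 10% minimum share are illustrative choices, not a standard.

```python
from collections import Counter

# Hypothetical tag names and minimum share per condition.
REQUIRED_CONDITIONS = ("day", "night", "transitional")
MIN_SHARE = 0.10


def coverage_gaps(frame_tags, required=REQUIRED_CONDITIONS, min_share=MIN_SHARE):
    """Return {condition: share} for required conditions below min_share."""
    total = len(frame_tags)
    if total == 0:
        # An empty dataset is missing every required condition.
        return {condition: 0.0 for condition in required}
    counts = Counter(frame_tags)
    gaps = {}
    for condition in required:
        share = counts[condition] / total
        if share < min_share:
            gaps[condition] = share
    return gaps
```

Running this before each training cycle gives a quick signal about which shifts or lighting conditions need more captures.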

Leverage Synthetic and Simulated Data When Real Samples Are Limited

Simulated data allow teams to create rare or dangerous scenarios that are difficult to capture safely in the real world. Synthetic point clouds, simulated collisions, or randomized lighting conditions help models generalize more effectively.

A good use case is robotic grasping: simulation can generate thousands of synthetic grasp attempts on objects of varying shapes and materials, providing a foundation before fine-tuning on real-world captures.
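
The randomization step can be sketched as sampling scene parameters from fixed ranges before each simulated grasp. Everything here is a placeholder: the `GraspScene` fields, the shape and material lists, and the numeric ranges are assumptions, and the sketch stops at parameter sampling rather than calling any particular simulator.

```python
import random
from dataclasses import dataclass


@dataclass
class GraspScene:
    shape: str
    material: str
    light_intensity: float  # relative brightness, 1.0 = nominal
    object_yaw_deg: float


# Hypothetical value sets; a real project would draw these from its object catalog.
SHAPES = ["box", "cylinder", "bottle"]
MATERIALS = ["cardboard", "plastic", "metal"]


def sample_scenes(n, seed=0):
    """Sample n randomized scene configurations, reproducible for a given seed."""
    rng = random.Random(seed)
    return [
        GraspScene(
            shape=rng.choice(SHAPES),
            material=rng.choice(MATERIALS),
            light_intensity=rng.uniform(0.2, 2.0),
            object_yaw_deg=rng.uniform(0.0, 360.0),
        )
        for _ in range(n)
    ]
```

Seeding the generator keeps a synthetic batch reproducible, which matters when a later model regression needs to be traced back to the data that produced it.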

Maintain Strong Versioning and Traceability

Robotics datasets evolve over months or even years. Version control allows teams to track which annotations led to which model behaviors, helping diagnose regressions or drift.

For example, if a navigation model starts failing on reflective surfaces, teams can trace the issue back to a specific dataset version where labeling rules changed, and correct the underlying annotation pattern.
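
One lightweight way to support that kind of trace is to store a content fingerprint alongside every trained model. The sketch below assumes annotations are exported as JSON-serializable records; the `dataset_fingerprint` function and the record fields are hypothetical, and dedicated data-versioning tools offer far more than this.

```python
import hashlib
import json


def dataset_fingerprint(annotations, guideline_version):
    """Return a deterministic SHA-256 hex digest of annotations plus guideline version."""
    payload = json.dumps(
        {"guidelines": guideline_version, "annotations": annotations},
        sort_keys=True,          # key order inside records does not affect the hash
        separators=(",", ":"),   # fixed separators keep the encoding stable
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()
```

Because the guideline version is part of the hash, a change in labeling rules produces a new fingerprint even when the underlying frames stay the same. Note that the order of records in the list does affect the result, so exports should be sorted consistently.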

Taken together, these practices make robotics datasets more reliable and scalable, ensuring models continue to perform accurately as environments, hardware, and operating conditions evolve.

The Biggest Challenges of Robotic Data Annotation

Robotic data annotation is uniquely demanding because robots operate in fast-changing, unpredictable environments. Unlike static image datasets, robotics data combines multiple sensor types that must be labeled in sync. A single scene may include RGB video, LiDAR point clouds, IMU readings, and depth data, all captured at different rates. Aligning and annotating these streams accurately is one of the biggest technical hurdles.
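
A common first step in aligning streams captured at different rates is nearest-timestamp matching. The sketch below pairs each camera frame with the closest LiDAR sweep and rejects pairs that are too far apart in time. The function name `nearest_match`, the millisecond units, and the `max_gap` tolerance are assumptions for this example; production systems usually also correct for clock offsets and motion between captures.

```python
from bisect import bisect_left


def nearest_match(camera_ts, lidar_ts, max_gap):
    """Pair each camera timestamp with the closest LiDAR timestamp.

    lidar_ts must be sorted ascending. A camera frame with no LiDAR sweep
    within max_gap is paired with None so it can be skipped or flagged.
    """
    pairs = []
    for t in camera_ts:
        i = bisect_left(lidar_ts, t)
        # Only the neighbors on either side of the insertion point can be closest.
        candidates = lidar_ts[max(i - 1, 0): i + 1]
        best = min(candidates, key=lambda s: abs(s - t), default=None)
        if best is not None and abs(best - t) > max_gap:
            best = None
        pairs.append((t, best))
    return pairs


# Hypothetical timestamps in milliseconds: camera near 30 Hz, LiDAR at 10 Hz.
camera = [0, 33, 66, 100]
lidar = [0, 100, 200]
matched = nearest_match(camera, lidar, max_gap=20)
```

Frames that come back paired with `None` have no sweep close enough to label jointly, which is often a better outcome than silently attaching a stale point cloud.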

Scale is another challenge. Even a simple warehouse AMR requires millions of frames and 3D scans. Reviewing, labeling, and validating that volume of data requires careful workflows, automation, and strong quality control.

Safety sensitivity raises the stakes further. Mislabeling an obstacle in a point cloud or marking an incorrect grasp point can lead to collisions, dropped objects, or erratic behavior. Because robots act on these labels in the real world, the cost of an annotation error is significantly higher than in many other AI domains.

Together, these challenges make robotic annotation a complex, high-precision task that demands specialized tools, domain expertise, and rigorous verification.
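
One common verification check is to compare two annotators' boxes for the same object using intersection over union (IoU) and flag low-agreement pairs for review. The sketch below handles axis-aligned 2D boxes only; the `iou` function name and the `(x_min, y_min, x_max, y_max)` box layout are assumptions for this example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Overlap along each axis, clamped at zero when the boxes do not intersect.
    overlap_x = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    overlap_y = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = overlap_x * overlap_y
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0
```

Two 10 by 10 boxes offset by half their width share 50 of 150 total units of area, giving an IoU of one third. The acceptance threshold is a project decision and tends to be stricter for safety-critical classes.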

How Does CVAT Support Robotics Data Annotation?

With CVAT, teams can annotate bounding boxes, polygons, segmentation masks, and 3D cuboids all within one environment. Features like advanced 3D visualization and collaborative project management make it suitable for both research teams and large enterprises handling complex robotic datasets.

CVAT is available in two deployment models.

With CVAT Online, teams can scale projects instantly without managing infrastructure.

For enterprises with strict data control or compliance needs, CVAT Enterprise offers the same power within a secure, private environment.

Both are built on CVAT’s open-source foundation and share the same high-performance tools for labeling and review.

Beyond software, CVAT also offers data labeling services for robotics companies that need expert annotation but lack internal capacity. These services help teams accelerate development by delivering high-quality labeled data quickly, without building an in-house annotation team.

Together, CVAT’s flexible platform options and professional services make it a complete solution for robotics companies that need reliable, scalable annotation pipelines to power next-generation automation.

Annotation Is Teaching Robots to See the World Clearly

The future of robotics depends on how well machines can interpret their surroundings, and data annotation is what turns streams of raw information into actionable insight, teaching robots to perceive, decide, and adapt.

As robotics expands into new sectors like logistics, healthcare, and manufacturing, the need for accurate annotation grows just as quickly. This means that building reliable, safe, and intelligent systems requires structured workflows, skilled teams, and the right technology.

In the end, every intelligent robot starts the same way: with precise, thoughtful annotation that helps it see the world as clearly as we do.

Ready to build smarter, safer robots? Explore how CVAT can streamline your annotation workflow and accelerate your robotics projects today.
