Modern farming is undergoing a rapid evolution that rivals the industrial revolution, this time driven by robotics and artificial intelligence. Agriculture may not be the first industry that comes to mind when you think of AI, but it is among those seeing the most growth: Startus Insights projects that the global AI market in agriculture will reach USD 4.7 billion by 2028, growing at a CAGR of 23.1%.

Furthermore, Nathan Boyd, a professor at the University of Florida, predicts that artificial intelligence will provide a 35% boost to agricultural production by 2030.

These statistics highlight how modern AI is finding use cases in agriculture and moving the industry far beyond simple mechanization to autonomous equipment that significantly boosts production.

But these tools aren’t simple plug-and-play solutions. Today’s autonomous farming equipment depends on large volumes of accurately labeled data to interpret crops, soil conditions, machinery paths, and livestock changes with the level of precision agriculture demands.

Understanding The Unique Aspects of Agricultural Data Annotation

Data annotation in agriculture spans both crop production and livestock management. Farms need labeled data to monitor plant growth, soil conditions, and yield patterns, while also tracking animal health, movement, and behavior across barns, pastures, and open ranges.

The process is also unique in agricultural settings because it must capture the shifts across seasons, growth stages, and daily environmental changes. These challenges stem from three core factors:

Diverse Visual and Sensor Conditions

Agricultural environments shift constantly, and those changes affect both crops and livestock. Crops progress through distinct growth stages that alter their appearance, and livestock show their own variations as animals grow, change coats, move in groups, or interact with equipment and fencing.

These challenges become more complex when farms rely on multiple sensor types. RGB imagery captures visible detail for plants and animals, while multispectral and NDVI data reveal crop stress, moisture levels, and vegetation health that the human eye cannot see. Thermal sensors or depth cameras may track livestock body temperature, movement patterns, or herd density.

Each modality brings different visual signatures and labeling requirements, increasing complexity across both plant-focused and animal-focused datasets.
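
As a rough illustration of how a multispectral band pair becomes something an annotator can work with, the sketch below computes NDVI per pixel using the standard formula (NIR - Red) / (NIR + Red) and flags low values as candidates for a "stressed" label. The band values, the `stress_mask` helper, and the 0.3 threshold are assumptions made for the example, not values taken from any particular sensor or project.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index for one pixel's reflectance values."""
    total = nir + red
    if total == 0:
        return 0.0  # assumption: treat pixels with no signal as neutral
    return (nir - red) / total


def ndvi_map(nir_band, red_band):
    """Apply NDVI pixel by pixel to two equally sized 2D bands (lists of rows)."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


def stress_mask(ndvi_values, threshold=0.3):
    """Flag pixels below a hypothetical threshold as candidate 'stressed' regions."""
    return [[value < threshold for value in row] for row in ndvi_values]


# Tiny made-up 2x2 reflectance bands (values between 0 and 1).
nir = [[0.8, 0.5], [0.3, 0.6]]
red = [[0.2, 0.5], [0.3, 0.2]]

values = ndvi_map(nir, red)
print(stress_mask(values))
```

In practice a mask like this would only pre-select regions for a human to review; the appropriate threshold depends on the crop, growth stage, and sensor.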

Biological Complexity and Natural Variability

Agriculture deals with living systems, which means conditions rarely stay consistent. Crops grow unevenly, shift in color and structure week by week, and often share features with weeds that closely mimic their shape and leaf patterns.

These similarities make it challenging for annotators to distinguish between desirable and invasive plants, especially in fields where early signs of disease, nutrient stress, or pest damage appear subtle and easy to overlook without expert guidance.

Livestock introduces its own layer of biological variability. Animals differ in size, posture, coat pattern, and behavior throughout the day, and these changes can affect how models detect movement, health indicators, or herd interactions. Young animals look different from adults, certain breeds share nearly identical markings, and early signs of illness may only show through slight posture shifts or reduced activity. Labeling such nuances requires careful observation and domain expertise across both plant and animal datasets.

Scale and Geographic Diversity

Agricultural datasets often span very large areas, sometimes covering entire fields, orchards, pastures, or multiple barns. These datasets can include thousands of images collected from drones, tractors, fixed cameras, handheld devices, or automated barn systems. Because farms vary widely in layout, terrain, and management style, annotators must work with imagery captured at different angles, distances, and resolutions while keeping labels consistent across large and diverse spaces.

Geography adds another layer of complexity for annotation work. A model trained on crops from one region may struggle in another if soil color, plant varieties, livestock breeds, or climate conditions differ significantly. Even subtle changes in vegetation density, lighting, or ground texture can affect how objects appear.

For livestock settings, factors like breed coloration, pasture vegetation, or barn materials may shift the visual context entirely. Ensuring annotations remain reliable across such varied environments is essential for creating models that generalize well beyond a single location.

Together, these factors make agricultural datasets some of the most variable and context-dependent in computer vision. The challenges are unlike those in controlled indoor environments because every field introduces new combinations of light, weather, soil texture, plant structure, and biological change.

The good news is that once these complexities are captured and structured, they unlock a wide range of practical applications.

Key Use Cases of Data Annotation in Agriculture

Once agricultural datasets are accurately labeled, they can be used to train AI systems that take on some of the most time-consuming and knowledge-intensive tasks on a farm. Some of the most common use cases include the following.

Crop Detection and Classification

Crop detection relies on annotated images that distinguish plant species, identify growth stages, and reveal early signs of stress. An accurate dataset helps models understand subtle visual differences in leaves, canopy density, and row structure.

With consistent labeling, AI systems can map fields, detect weeds, and assess crop vigour automatically. This allows farmers to target interventions only where needed, reducing waste and improving yield precision.

A practical example is John Deere’s See & Spray platform, which uses millions of annotated field images to identify weeds in real time, allowing farmers to reduce herbicide use.

Soil and Land Condition Analysis

Soil and land-condition datasets help segment field zones, identify erosion risks, and guide variable-rate irrigation or fertilization. Models trained on these annotations can highlight areas that need attention before problems become visible.

To achieve this, farmers and agribusinesses routinely use data derived from NASA’s SMAP mission, which produces labeled soil-moisture measurements that support irrigation planning and drought monitoring across major crop-growing regions.

Disease Recognition

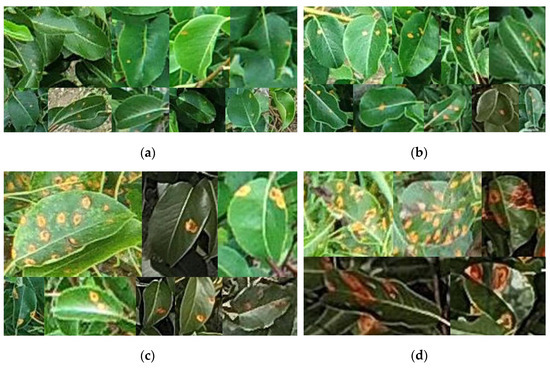

Disease detection is another major use case on many modern farms. Here, researchers and agritech teams build datasets using annotated images of leaves, stems, or fruit captured at various growth stages to teach AI systems to recognize disease symptoms such as spotting, discoloration, or deformation.

And because real-world conditions (lighting changes, shadows, overlapping leaves, varying plant morphology) introduce noise and complexity, reliable annotation is key to minimizing false positives/negatives and ensuring that models generalize beyond lab-standard images.

To do this on a large scale, teams often rely on annotation tools such as CVAT to generate high-quality labeled datasets. For example, a 2024 study using drone-based imagery for orchard disease detection reported creating over 16,000 annotations of leaves (in a set of 584 images) to train an object-detection model.

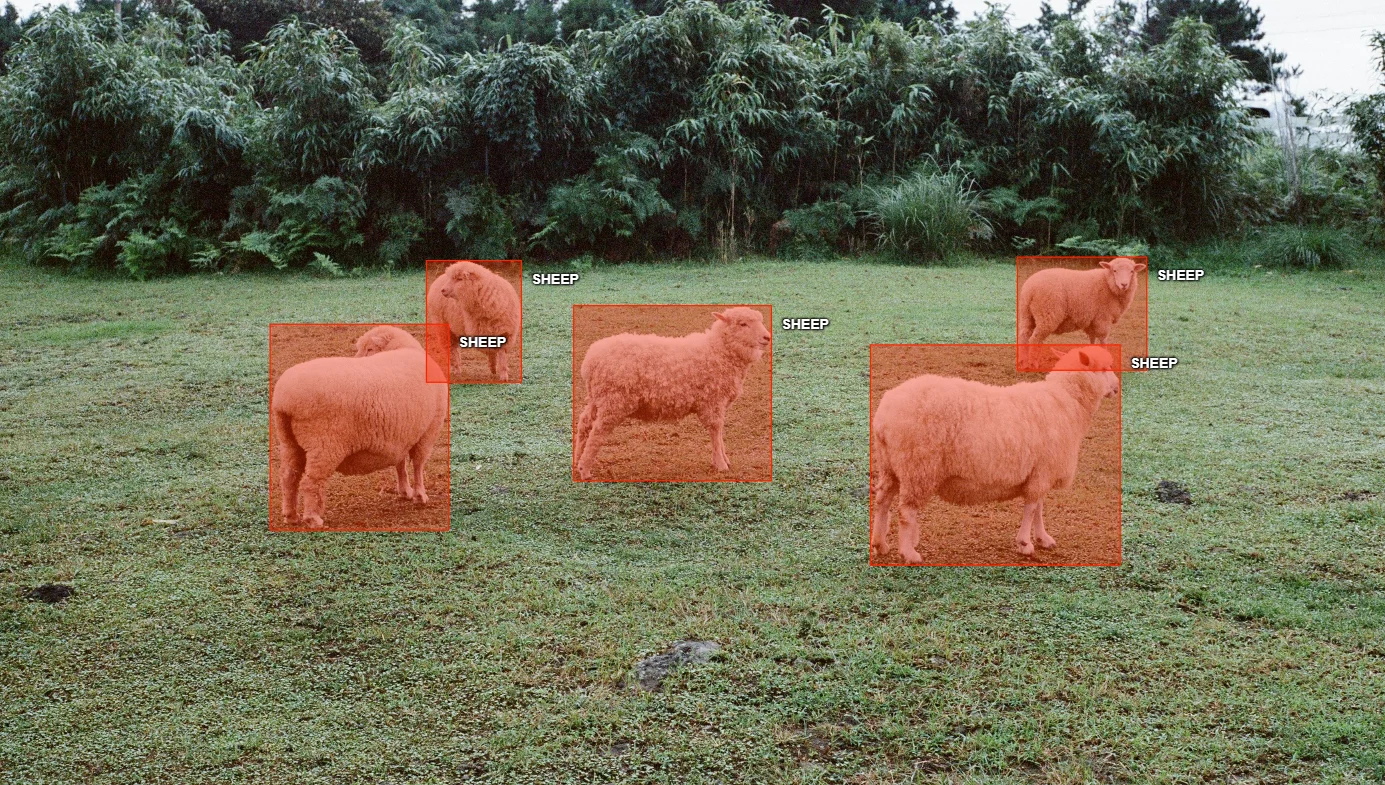

Livestock Monitoring and Behavior Tracking

Livestock monitoring often relies on richly annotated video and image datasets that identify individual animals, track their movements, and classify physical behaviours over time. These labels are what allow AI systems to interpret posture, gait, feeding patterns, and herd distribution, and even to detect signs of distress or lameness.

Because natural farm conditions can involve variable lighting, occlusion, group clustering, and changing backgrounds, high-quality annotation is critical for ensuring models work robustly outside controlled environments.

For example, the CVB dataset, collected under natural lighting and annotated using CVAT, comprises 502 video clips (each 15 seconds long) and covers 11 visually perceptible cattle behaviours including grazing, walking, resting, drinking, ruminating, and more.

Another notable real-world example comes from a 2023 study on pig movement estimation: researchers used CVAT to annotate images with differently coloured bounding boxes for individual pigs, explicitly marking occlusions when pigs overlapped or movement was obstructed. This enabled multi-object tracking that underpins reliable activity and mobility analysis, an approach that could be adapted to cattle or other livestock.

Types of Annotation Used in Agriculture

We've covered the crucial use cases for AI on the farm, but how do we actually teach the AI to do these tasks? That's where specific annotation techniques come in.

Object Detection with Bounding Boxes

The bounding box is probably the easiest way to annotate data. This technique is popular because it's fast and simple, perfect for high-volume tasks where you just need to know the general location of something rather than its exact shape.

In the agricultural world, bounding boxes are often used for yield estimation, for example rapidly counting apples, strawberries, or grain heads to predict harvest volume. This approach is all about processing massive amounts of data quickly.

A couple of other common applications for bounding box annotation include:

- General Weed Detection: Identifying patches of unwanted plants for broad-spectrum treatment.

- Livestock Counting: Tracking the number of animals in a herd from aerial drone footage.

For agricultural projects, using tools like CVAT allows annotators to quickly label thousands of frames using keyboard shortcuts. This efficiency is critical when processing the vast datasets required to train robust detection models.
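
To make the counting idea concrete, here is a minimal sketch of what bounding-box labels might look like once exported, and how they could feed a per-class count. The annotation dictionaries, label names, and image size are invented for illustration; the normalized center format (x center, y center, width, height divided by image size) is the convention commonly used by YOLO-style detectors, not something specific to any one tool.

```python
from collections import Counter


def to_normalized_center(box, img_w, img_h):
    """Convert (x_min, y_min, x_max, y_max) in pixels to normalized
    (x_center, y_center, width, height)."""
    x_min, y_min, x_max, y_max = box
    return (
        (x_min + x_max) / 2 / img_w,
        (y_min + y_max) / 2 / img_h,
        (x_max - x_min) / img_w,
        (y_max - y_min) / img_h,
    )


def count_by_label(annotations):
    """Count how many boxes carry each label, e.g. for a rough yield estimate."""
    return Counter(item["label"] for item in annotations)


# Hypothetical labels for a single 800x400 orchard image.
annotations = [
    {"label": "apple", "box": (100, 50, 200, 150)},
    {"label": "apple", "box": (300, 200, 380, 280)},
    {"label": "weed", "box": (0, 400, 160, 480)},
]

counts = count_by_label(annotations)
first = to_normalized_center(annotations[0]["box"], img_w=800, img_h=400)
print(counts["apple"], first)
```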

Labeling with Polygons and Masks

Simple bounding boxes excel at defining the general location of an object, but advanced applications demand a much higher level of precision.

Consider automated spot-sprayers in agriculture, which must apply chemicals to a tiny weed without affecting the surrounding crop. Achieving this surgical accuracy requires data labels that trace the exact outline of the object, which is accomplished using both Polygon and Mask annotation methods.

Polygons store the object as a vector outline defined by a series of coordinates connected by straight segments. This method is often faster for human annotators to draw manually and offers good accuracy, but it is limited by the number of points used and can struggle with minute details.

Masks, conversely, store the object as a pixel-accurate region, meaning every single pixel is designated as either belonging to the object or not. This offers the highest possible accuracy, capturing fine edges and irregular outlines, and is the native input required by many advanced segmentation models.

Choosing between a polygon and a mask comes down to a trade-off between speed and necessary fidelity. This is what you must consider when deciding on a labeling strategy for a new project:

- Precision Level: Masks provide pixel-perfect accuracy, which is required when extreme detail (like mapping the exact spread of a tiny fungus) is necessary. Polygons are sufficient when only a high-fidelity outline is needed for detection or simpler segmentation.

- Annotation Speed: Polygons are generally quicker to draw by hand, making them more efficient for simpler shapes. Masks are slower for manual tracing but can be significantly faster when using AI-assisted tools like Segment Anything Model (SAM).

- Model Requirement: Models performing semantic or instance segmentation are typically trained on pixel-level labels, making the Mask format the standard and most compatible output. When polygons are used for segmentation, their coordinates are usually rasterized into masks by the training pipeline before they reach the model.

CVAT supports both of these high-precision tools, including polygon drawing tools and a dedicated Brush tool for mask annotation. By offering these distinct options, CVAT ensures that data scientists can select the optimal method to train models on the true morphology of plants, enabling hyper-accurate tasks like robotic harvesting or targeted spot spraying to be executed successfully.
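
The relationship between the two formats can be sketched in a few lines: a polygon is a list of vertices, and a mask is what you get when you test every pixel against that outline. The example below uses even-odd ray casting on pixel centers. It is a simplified illustration, not how any particular tool or library performs the conversion, and the `leaf` outline is made up.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd rule: cast a ray to the right of (x, y) and toggle on each edge crossing."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def polygon_to_mask(polygon, width, height):
    """Rasterize a polygon into a binary mask by testing each pixel center."""
    return [
        [1 if point_in_polygon(col + 0.5, row + 0.5, polygon) else 0
         for col in range(width)]
        for row in range(height)
    ]


# Hypothetical rectangular outline on a 6x5 pixel image.
leaf = [(1, 1), (5, 1), (5, 4), (1, 4)]
mask = polygon_to_mask(leaf, width=6, height=5)
for row in mask:
    print(row)
```

The trade-off described above shows up directly: the polygon needs four numbers pairs to describe the shape, while the mask stores a decision for every pixel and can therefore represent outlines that no small set of vertices would capture.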

3D Point Cloud Annotation

Autonomous farm equipment operates in complex three-dimensional environments, meaning annotators must also label objects and structures within the 3D spatial geometry captured by LiDAR. This is what allows tractors, sprayers, and robotic harvesters to navigate fields, obstacles, and surrounding terrain.

To label this data, annotators use 3D cuboids to define the size, distance, and orientation of obstacles. It's essentially the same core technology used in self-driving cars, adapted to help autonomous machinery navigate the unpredictable terrain of a farm, detecting everything from fences to ditches in low visibility.

This 3D data is crucial for:

- Autonomous Navigation: Helping tractors detect fences, ditches, and utility poles in low-visibility conditions.

- Canopy Volume Analysis: Measuring the density of fruit tree branches to automate pruning equipment.

- Obstacle Avoidance: Ensuring robots stop for workers, animals, or equipment left in the field.

CVAT provides a specialized 3D environment where annotators can label objects in three dimensions. This capability is vital for AgTech companies developing fully autonomous field machinery that must operate safely without human intervention.
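
A 3D cuboid label is, at its core, a small record of position, size, and orientation. The sketch below assumes one common convention (center, length/width/height, and a yaw angle around the vertical axis) and shows how such a label could be used to collect the LiDAR points that fall inside it. The `Cuboid` class, its field names, and the sample points are illustrative assumptions, not the export schema of any specific tool.

```python
import math
from dataclasses import dataclass


@dataclass
class Cuboid:
    label: str
    cx: float      # center coordinates, metres
    cy: float
    cz: float
    length: float  # extent along the box's own x axis
    width: float   # extent along the box's own y axis
    height: float  # extent along the vertical axis
    yaw: float     # rotation around the vertical axis, radians

    def contains(self, x, y, z):
        """Rotate the point into the box's frame, then compare with the half-extents."""
        dx, dy, dz = x - self.cx, y - self.cy, z - self.cz
        cos_y, sin_y = math.cos(self.yaw), math.sin(self.yaw)
        local_x = dx * cos_y + dy * sin_y
        local_y = -dx * sin_y + dy * cos_y
        return (
            abs(local_x) <= self.length / 2
            and abs(local_y) <= self.width / 2
            and abs(dz) <= self.height / 2
        )


def points_inside(cuboid, points):
    """Return the subset of (x, y, z) points that lie inside the cuboid."""
    return [p for p in points if cuboid.contains(*p)]


# Hypothetical fence segment rotated 90 degrees, plus three made-up LiDAR returns.
fence = Cuboid("fence", cx=10.0, cy=2.0, cz=1.0,
               length=4.0, width=0.5, height=2.0, yaw=math.pi / 2)
scan = [(10.0, 3.5, 1.0), (11.5, 2.0, 1.0), (10.0, 2.0, 2.5)]
hits = points_inside(fence, scan)
print(len(hits))
```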

Video Annotation and Object Tracking

Video annotation captures movement by tracking objects across time, which is essential for understanding behavior and patterns. Instead of labeling one image at a time, annotators track a subject, like a single cow or a drone's flight path, across an entire sequence of frames.

This focus on movement allows AI models to understand context, velocity, and direction, which drives advancements in things like detecting lameness in livestock or creating cohesive, stitched-together maps from drone footage.

Video annotation is commonly used for:

- Livestock Behavior Monitoring: Detecting signs of lameness or tracking feeding habits over time.

- Drone Flight Analysis: Stitching together video feeds to create cohesive maps of large fields.

- Growth Monitoring: Tracking the development of specific plants over days or weeks.

Tools like CVAT excel here by offering interpolation as well as AI-assisted tracking with SAM2, proprietary models, and fine-tuned ML models, which automatically pre-label objects with shapes between keyframes. This drastically reduces the manual effort required to label long video sequences, making it feasible to process hours of field footage.
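
The idea behind keyframe interpolation can be shown with a short sketch: the annotator draws a box on a few keyframes, and the frames in between are filled in by blending the two nearest keyframe boxes. The version below uses plain linear interpolation, which is an assumption made for illustration; real tools may use other schemes, and the `cow` track is invented.

```python
def interpolate_box(start_frame, start_box, end_frame, end_box, frame):
    """Linearly blend two (x_min, y_min, x_max, y_max) boxes for an in-between frame."""
    if not start_frame <= frame <= end_frame:
        raise ValueError("frame must lie between the two keyframes")
    if end_frame == start_frame:
        return tuple(start_box)
    t = (frame - start_frame) / (end_frame - start_frame)
    return tuple(a + t * (b - a) for a, b in zip(start_box, end_box))


def fill_track(keyframes):
    """Given {frame: box} keyframes, return a box for every frame from first to last."""
    frames = sorted(keyframes)
    if not frames:
        return {}
    track = {}
    for f0, f1 in zip(frames, frames[1:]):
        for frame in range(f0, f1):
            track[frame] = interpolate_box(f0, keyframes[f0], f1, keyframes[f1], frame)
    track[frames[-1]] = tuple(keyframes[frames[-1]])
    return track


# Hypothetical cow labeled by hand on frames 0 and 10 only.
cow = {0: (100, 200, 180, 260), 10: (150, 200, 230, 260)}
track = fill_track(cow)
print(len(track), track[5])
```

Here two manual boxes yield eleven labeled frames. The annotator then only needs to add a new keyframe where the animal's real motion departs from the straight-line estimate.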

Best Practices for Building Reliable Agricultural AI Datasets

Moving from understanding annotation types and use cases to executing a successful project requires building a reliable dataset. That is difficult because, unlike data from controlled environments, agricultural data is inherently messy and chaotic.

To build an AI that can handle the unpredictability of fields, you have to prioritize consistency, clarity, and the relentless pursuit of edge cases. The following foundational practices help ensure your model doesn't just work in a testing lab, but delivers accurate, profitable results when the sun is setting, the tractor is shaking, or the disease is barely visible.

1. Account for Real-World Environmental Conditions

The natural world is unpredictable, and this is the biggest challenge for agricultural AI. Models trained only on sunny, clear images of mature plants tend to fail when faced with shadows, dust, fog, or early-stage growth. To make a reliable dataset, annotators must actively seek out and label the data points where the sensor input is poor or the visual differences are subtle.

This means you need to intentionally collect and annotate challenging data, such as footage taken at dusk, imagery from under dense tree canopies, or examples of objects partially obscured by mud.

By feeding the model these "hard examples," you prevent model overconfidence and build a system that can maintain high performance and safety across all hours, seasons, and weather conditions.

2. Develop Unambiguous Labeling Protocols

Ambiguity in labeling is the fastest way to ruin a dataset. That is why annotators need precise specifications of what constitutes a "weed" (Does it include dead weeds? Does a tiny seedling count?) or how much of a disease spot must be present before it's classified as "severe." Without this clarity, multiple annotators will inevitably label the same data differently, severely reducing data consistency.

Therefore, every agricultural annotation project must start with a living document of visual guidelines. These guides should include examples of every object class, detailed rules for overlap and occlusion, and specific instructions for handling sensor noise.
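
One way to check whether guidelines are actually unambiguous is to have two annotators label the same sample and measure how often they agree beyond chance. The sketch below computes Cohen's kappa, a standard agreement statistic, for class labels. The two label lists are invented, and what counts as an acceptable score is a project decision rather than a fixed rule.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    classes = freq_a.keys() | freq_b.keys()
    # Chance agreement: probability both pick the same class independently.
    expected = sum(freq_a[c] * freq_b[c] for c in classes) / (n * n)
    if expected == 1:
        return 1.0  # both annotators used a single identical class throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators on the same ten plant crops.
annotator_a = ["weed", "weed", "crop", "crop", "weed", "crop", "crop", "weed", "crop", "crop"]
annotator_b = ["weed", "crop", "crop", "crop", "weed", "crop", "weed", "weed", "crop", "crop"]

kappa = cohens_kappa(annotator_a, annotator_b)
print(round(kappa, 3))
```

A low score on a pilot batch is a signal to revisit the guidelines (for example, the seedling rule) before scaling up labeling.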

3. Implement a Continuous Feedback Loop (Active Learning)

Annotation isn't a one-time process; it's a cycle. After an initial model is trained, it's inefficient to keep labeling data randomly. A much better strategy is to use the model's performance to guide the next round of human labeling through a process called Active Learning. This approach identifies the data points the model is most uncertain about.

By prioritizing these uncertain examples for human annotation, you maximize the impact of every hour spent by your labeling team. This allows the model to quickly learn from its weaknesses. Furthermore, incorporating field experts (agronomists, crop specialists) into the review process ensures that the most complex, high-value labels are validated by human domain knowledge before the data is fed back into the training loop.
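
A simple way to picture this loop is uncertainty sampling: score each unlabeled image by how unsure the model is, then send the most uncertain ones to annotators first. The sketch below uses the entropy of the predicted class probabilities as the uncertainty score. This is one common choice among several, and the file names, probabilities, and `budget` parameter are made up for the example.

```python
import math


def entropy(probs):
    """Shannon entropy of a class-probability vector; higher means less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, budget):
    """Return the `budget` image ids whose predictions are most uncertain."""
    ranked = sorted(predictions, key=lambda image_id: entropy(predictions[image_id]),
                    reverse=True)
    return ranked[:budget]


# Hypothetical model outputs over three classes (healthy, diseased, unknown).
predictions = {
    "field_001.jpg": [0.98, 0.01, 0.01],
    "field_002.jpg": [0.40, 0.35, 0.25],
    "field_003.jpg": [0.70, 0.20, 0.10],
    "field_004.jpg": [0.34, 0.33, 0.33],
}

queue = select_for_labeling(predictions, budget=2)
print(queue)
```

The confidently classified image is skipped, while the two near-ties go to the front of the queue, where a reviewing agronomist's time has the most effect.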

Bring AI to Your Agricultural Project with CVAT

Ultimately, the future of precision agriculture isn't just about faster machines; it's about smarter ones. As this guide shows, making your AI systems reliable, accurate, and safe depends entirely on the quality of the data they are trained on, just as the quality of soil is vital to the health and nutrition of plants.

By adopting the best practices of agricultural data annotation, you can ensure your models deliver real-world value.

One last important consideration to note:

The complexity of agricultural data, involving everything from satellite images to 3D point clouds, demands a versatile and powerful annotation platform.

That is why agricultural teams are turning to CVAT, which supports precise workflows for tasks like segmentation and object detection while streamlining operations. To handle these demands, the platform provides a robust suite of features designed for scalability:

- Complete manual annotation toolset including bounding boxes, ellipses, masks, polygons, and polylines.

- Model-assisted pre-labeling via SAM2, Ultralytics YOLO, Roboflow, Hugging Face, and custom models.

- Advanced QA tools including ground truth management, honeypots, and immediate job feedback.

- Deep analytics to track project progress and annotator performance.

- Comprehensive team management with granular permissions and distinct project-task-job logic.

If you’re ready to move beyond basic crop counting and build truly reliable agricultural AI, CVAT can streamline your annotation workflow and accelerate your agriculture project.

- Start with CVAT Online, if your team wants to scale labeling projects instantly without managing infrastructure.

- If your project requires labeling within a secure, private environment, CVAT Enterprise offers the same power while supporting fully on-premise deployment, SSO, and other features for secure and compliant labeling.
